Apple Silicon Limitations for Local LLM Usage

Maximizing LLM Usage on a 128GB M1 Ultra (Apple Silicon Mac Studio)

Understanding Unified Memory and the 96GB “VRAM” Limit

Apple’s M1 Ultra chip uses unified memory, meaning the CPU and GPU share the same 128 GB RAM pool. However, macOS does not allow the GPU to use all 128 GB for graphics/compute tasks by default. In practice, about 75% of the physical memory is the recommended maximum for GPU usage. This is why tools like Ollama (which uses Apple’s Metal API for GPU acceleration, via llama.cpp) report roughly 96 GB as available “GPU memory” on a 128 GB system: the remaining 25% is reserved for the OS and CPU tasks.

In Apple’s Metal API, the property `recommendedMaxWorkingSetSize <https://developer.apple.com/documentation/metal/mtldevice/recommendedmaxworkingsetsize>`__ reflects this limit. It’s essentially the largest memory footprint the GPU can use “without affecting performance” (i.e. without causing memory pressure or swaps). For a 128 GB Mac, this value comes out to about 96 GB. (By comparison, a 64 GB Mac has ~48 GB usable for GPU, and a 32 GB Mac only ~21–24 GB for GPU, which is ~65–75% of RAM.) In short, Ollama showing 96 GB is not a bug – it’s reflecting an Apple-imposed limit on how much unified memory the GPU backend (Metal/MPS) will utilize.

Why is Only 75% of Memory Usable by the GPU?

This behavior appears to be a deliberate system design. Apple likely reserves a portion of unified memory to ensure the system remains responsive and to avoid GPU allocations evicting critical data needed by the OS or CPU. Apple’s documentation calls this a “recommended” working set size, but in practice it functions as a hard cap for GPU memory in user-space programs. Developers on Apple’s forums note that the limit is roughly 75% of physical RAM, and it’s hard-coded in current macOS drivers. Unfortunately, Apple hasn’t been very public about this constraint (leading some to call it “false advertising” when touting unified memory sizes).

Is this a Metal API limitation or Ollama’s fault? It’s fundamentally a limitation of the Metal drivers / macOS GPU memory manager, not something specific to Ollama. Any software using GPU acceleration on macOS (be it Ollama’s ggml/Metal backend, PyTorch with MPS, TensorFlow Metal, etc.) will see a similar ceiling. Ollama is built on llama.cpp with Metal support, so it inherits the same constraint. In other words, by default no single process can use the full 128 GB for GPU computations – about 32 GB will be left unused by the GPU (though the CPU can still use that portion for other things).

Apple’s own guidance to developers suggests breaking up tasks to fit within the recommended working set or streaming data in and out as needed. For LLMs, however, the entire model often needs to reside in memory for fast inference, so “just split the job” isn’t straightforward. If an LLM exceeds the GPU allotment, one of two things typically happens:

Partial CPU Offload: Frameworks may load whatever fits on the GPU and offload the remainder to CPU. This allows the model to run, but slows performance since the CPU is much slower for matrix multiplies. (For example, on Apple M2 Max, GPU inference for a 65B model was nearly 2× faster than CPU inference. So keeping as much on the GPU as possible is key to performance.) In Ollama/llama.cpp, if your model is slightly too large for GPU memory, you might notice the last few layers or the KV cache running on CPU, causing a slowdown in token generation speed.

Out-of-Memory Error or Eviction: If the model far exceeds the GPU limit, you may simply get an error or the process will use macOS virtual memory (disk swap), leading to severe slowdowns. macOS does not automatically “expand” the GPU memory beyond the recommended limit, so an oversized model can fail to load under Metal. For instance, a user found that a LLaMA2 70B 6-bit model (~52.5 GB) could not fully load on a 64 GB Mac until they reduced the GPU use or split some of it to CPU, since only ~48 GB was available to the GPU by default.
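The two outcomes above can be sketched as a quick back-of-the-envelope check. This is only a rough illustration in Python: the function names are hypothetical, the 75% fraction is the approximate default discussed above (some configurations report closer to 65%), and it ignores the KV cache and whatever the OS itself needs.

```python
def gpu_budget_gb(total_ram_gb: float, gpu_fraction: float = 0.75) -> float:
    """Approximate default GPU working-set budget (assumed ~75% of RAM)."""
    return total_ram_gb * gpu_fraction


def placement(model_gb: float, total_ram_gb: float, gpu_fraction: float = 0.75) -> str:
    """Classify where a model of a given size would roughly end up."""
    budget = gpu_budget_gb(total_ram_gb, gpu_fraction)
    if model_gb <= budget:
        return "fits on GPU"
    if model_gb <= total_ram_gb:
        # Larger than the GPU budget but still within physical RAM.
        return "partial CPU offload"
    # Larger than physical RAM: expect a load failure or heavy swapping.
    return "exceeds RAM"


if __name__ == "__main__":
    # (model size in GB, machine RAM in GB); sizes are illustrative.
    for model_gb, ram_gb in [(40, 128), (52.5, 64), (110, 128), (140, 128)]:
        print(f"{model_gb} GB model on {ram_gb} GB Mac: {placement(model_gb, ram_gb)}")
```

With the numbers from the example above, a ~52.5 GB model on a 64 GB Mac lands in the "partial CPU offload" case because the default budget is only about 48 GB.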

In summary, the 96 GB cap on a 128 GB M1 Ultra is a safety mechanism in macOS’s Metal driver. It’s not easily changed via normal settings, and it affects all apps using the GPU. Next, we’ll explore how you can override or work around this limit to better utilize your high-memory Mac for local LLMs.

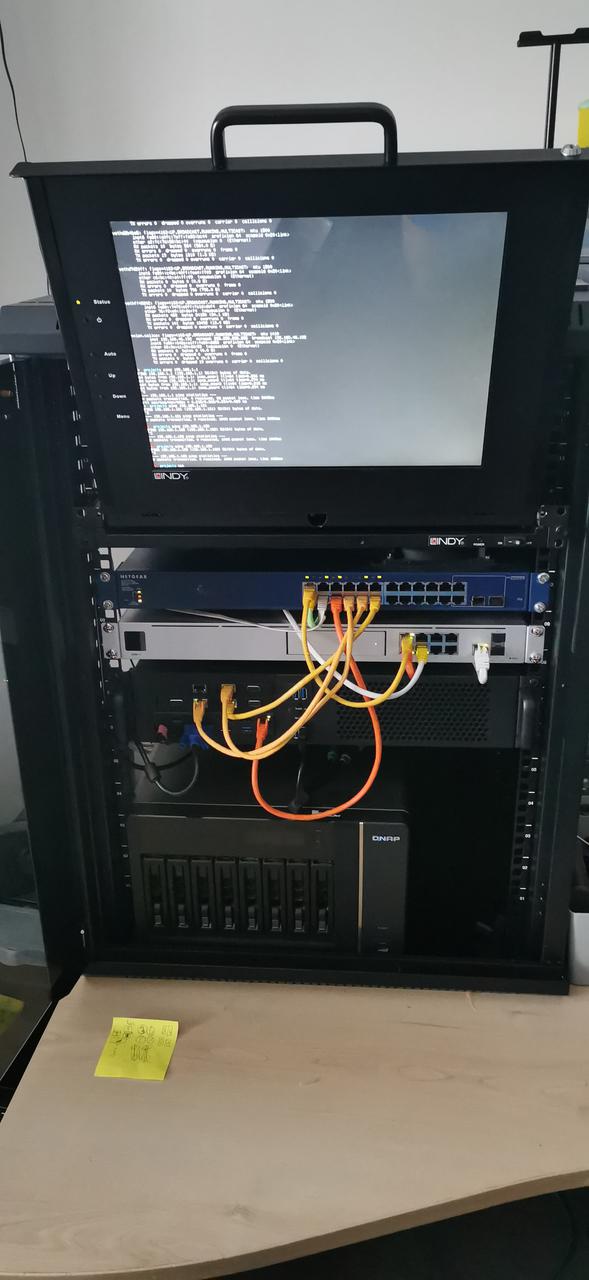

Increasing GPU Memory Utilization (Beyond the 96 GB Default)

While Apple doesn’t provide an official toggle to use more of the unified memory, advanced users have discovered a way to manually raise the GPU memory limit on Apple Silicon Macs. This involves using a ``sysctl`` command to adjust a hidden kernel parameter. Use these tweaks at your own risk: they are not officially supported by Apple (and may require disabling System Integrity Protection in some cases).

How to override the VRAM limit on macOS:

Determine a safe memory split. Decide how much RAM to allocate to GPU tasks vs. leave for the OS/CPU. It’s wise not to give 100% of RAM to the GPU, or you risk starving the OS. A common recommendation is to leave at least 8–16 GB for the system. For a 128 GB Mac Studio, you might aim to allocate around 120 GB to the GPU and keep ~8 GB for the OS. (120 GB is 93.75% of 128 GB – users have reported success with this, effectively raising the limit from 96 GB to 120 GB.)

Run the ``sysctl`` command to set the new limit. Open the Terminal app and enter ``sudo sysctl iogpu.wired_limit_mb=<desired_MB>``.

Replace ``<desired_MB>`` with the amount of memory (in MB) you want the GPU to be allowed to use. For example, to set ~120 GB, use ``iogpu.wired_limit_mb=122880`` (since 120×1024 = 122880 MB). For 96 GB (the default on 128), it would be 98304, and for 128 GB (not recommended), 131072. On macOS Sonoma (14.x) and later, use the ``iogpu.wired_limit_mb`` key. (On older macOS versions (e.g. Ventura or Monterey), the key was named ``debug.iogpu.wired_limit`` and took a value in bytes. Sonoma simplified it to MB and changed the name.)

After running the command with ``sudo``, enter your password if prompted. No reboot is required – the setting takes effect immediately. For example, one user with a 64 GB M1 Max ran ``sudo sysctl iogpu.wired_limit_mb=57344`` (56 GB) and saw the Metal ``recommendedMaxWorkingSetSize`` jump accordingly in the logs, allowing the GPU to use 56 GB instead of the default ~48 GB.

Verify the new GPU memory availability. You can check that the limit changed by looking at your LLM application’s logs or using system tools:

In Ollama, run a model and then check the Ollama server log. You should see a line like ``ggml_metal_init: recommendedMaxWorkingSetSize = XXXX MB``. After the sysctl tweak, this number should reflect your new setting (e.g. ~120000 MB) rather than the old ~98304 MB.

You can also use ``sysctl iogpu.wired_limit_mb`` without ``=`` to read the current value and confirm it’s set.

The ``ollama ps`` command will show the GPU memory usage for a loaded model (e.g. “25 GB – 100% GPU” for a 25 GB model). After raising the limit, you’ll be able to load larger models before that hits 100%.

(Optional) Persist the setting across reboots. By default, the change made by ``sysctl`` will reset on reboot (the parameter goes back to ``0``, which means “use default 75%”). If you want this change to apply automatically at startup, you can add the setting to ``/etc/sysctl.conf`` (create the file if it doesn’t exist) in the format ``iogpu.wired_limit_mb=<desired_MB>``.

Keep in mind that to modify this file, you might need to disable System Integrity Protection (SIP) on your Mac, since altering certain kernel parameters permanently is restricted. Many users simply re-run the ``sudo sysctl`` command when needed, to avoid disabling SIP. Given that it’s a one-liner and only needed when you plan to do heavy ML work, manually setting it is usually fine. (If you do add it to sysctl.conf, and later want to revert to defaults, remove the line and reboot, or set it back to 0 which tells macOS to use the default 75% limit.)

Important cautions: Pushing the GPU memory limit closer to 100% of RAM can cause instability if macOS or other apps suddenly need more memory. Monitor Memory Pressure in Activity Monitor – if it stays green while running your model, you’re okay; if it goes red or the system starts swapping, consider dialing back the GPU allocation. In practice, leaving a buffer for the OS (and for things like the LLM’s CPU-based components) is crucial. Many folks find leaving ~8–16 GB for system use keeps things stable even under heavy load.
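As a small aid for the sizing step above, the following Python sketch computes the MB value from total RAM and the amount you want to leave for the system, and prints the matching command. The helper names are hypothetical and the 8 GB default reserve is just the lower end of the range suggested above; the script only builds the command string and does not run it.

```python
def wired_limit_mb(total_ram_gb: int, reserve_gb: int = 8) -> int:
    """Return the GPU wired limit in MB, leaving reserve_gb for the OS/CPU."""
    if reserve_gb < 0 or reserve_gb >= total_ram_gb:
        raise ValueError("reserve_gb must be between 0 and total_ram_gb")
    return (total_ram_gb - reserve_gb) * 1024


def sysctl_command(limit_mb: int) -> str:
    """Build the command for macOS Sonoma (14.x) and later; nothing is executed."""
    return f"sudo sysctl iogpu.wired_limit_mb={limit_mb}"


if __name__ == "__main__":
    # 128 GB Mac Studio, keeping 8 GB for the system.
    print(sysctl_command(wired_limit_mb(128, reserve_gb=8)))
    # 64 GB machine, keeping 8 GB for the system.
    print(sysctl_command(wired_limit_mb(64, reserve_gb=8)))
```

For a 128 GB machine with 8 GB reserved this yields 122880, and for a 64 GB machine it yields 57344, matching the values quoted in the steps.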

Other Workflows to Maximize Hardware Utilization

Aside from raising the Metal memory cap, here are additional tips and configurations to fully leverage a 128 GB M1 Ultra for local LLM inference:

Use Quantized Models to Fit More in Memory: Take advantage of quantization (4-bit, 5-bit, or 8-bit) so that large models consume less memory. For example, LLaMA-2 70B in 4-bit precision might use ~35–40 GB, which easily fits in the 96 GB GPU limit (even leaving room for a large context window). With 128 GB total, you could even load a 70B model at 8-bit (~70–80 GB) entirely in GPU memory, comfortably within the ~120 GB available after the sysctl tweak. Lower-bit quantization not only saves memory but also reduces memory bandwidth usage, often improving throughput. Note: the Metal backend in llama.cpp (which Ollama builds on) supports 4-bit and 5-bit quantization via the ggml library, but very aggressive quantization (2-bit) may not be supported or may degrade quality significantly. Choose the smallest model precision that still gives you acceptable accuracy.

Optimize Context Length vs. Speed: If you increase the context length (the token window for prompts/history), be aware it linearly increases memory usage for the model’s KV cache. A longer context can cause the GPU memory usage to balloon and potentially exceed the limit, forcing a fallback to CPU memory (which will slow down generation). For example, going from 2048 tokens to 8192 tokens of context will roughly quadruple the memory required for the KV cache. If you find that long conversations slow down on your model, it might be because you’ve exceeded the GPU memory and spilled into CPU RAM. To max out performance, try to keep context length within what your GPU portion can handle. You can monitor this via ``ollama ps`` (it shows how much VRAM the model + context is using). If you need ultra-long contexts, consider the memory tweak above, or use a smaller model that leaves headroom for the KV cache.

Leverage CPU RAM for Overflow (Hybrid Offloading): The nice thing about unified memory is that even when you hit the GPU’s recommended limit, the remainder of the model can reside in normal RAM. Frameworks like llama.cpp will automatically use CPU for the parts that don’t fit on GPU. While pure GPU use is fastest, this hybrid approach means you can technically load models larger than 96 GB – up to the full 128 GB – but with some of the work handled by CPU. If a model is only moderately over the GPU limit, this is efficient: e.g., a ~116 GB model on a 128 GB Mac might run ~96 GB on GPU and the other ~20 GB on CPU. It will be slower than fully on-GPU, but still functional. Note: Ollama doesn’t currently expose a manual setting for “GPU layers” vs “CPU layers”, but llama.cpp’s CLI does (the ``-ngl`` flag specifies number of GPU layers to offload). In practice, just attempt to load the model – if it’s over budget, the system will offload automatically. If performance is too slow, you may need to choose a smaller model or reduce context.

CPU-Only Inference as a Fallback: In cases where GPU acceleration isn’t working (e.g., a model with unsupported operations on MPS, or if you want to use vLLM which currently lacks MPS support), you can run on the 20-core CPU of the M1 Ultra. The CPU can use the entire 128 GB for the model, no 75% restriction. The downside is speed: CPU inference is much slower for large models. Still, for certain large models that simply cannot fit in 96 GB (even quantized), CPU mode is an option. Ollama does not have a simple switch for CPU-only, but you could use the underlying ``llama.cpp`` compiled without Metal, or another library’s CPU path. Expect that a 70B model on CPU will be several times slower than on the Apple GPU (e.g., <1 token/sec in worst cases). This is really a last resort if GPU memory is exhausted or if using a backend like vLLM which, as of 2025, runs on CPU on Macs.

Stay Updated with Apple’s Tools: Apple is actively improving their machine learning support on Mac. Ensure you’re on the latest macOS (newer versions sometimes raise or optimize memory limits) and using the latest version of Ollama/llama.cpp. For instance, macOS updates have reportedly adjusted the usable GPU memory fraction for some configs (some older systems saw ~65% use, newer ones 75%). Likewise, ``llama.cpp`` and Ollama are rapidly evolving – newer releases might have better memory management, faster Metal kernels, or support for the Apple Neural Engine (ANE) if Apple ever opens it up. Keeping these updated will help maximize performance.

High-Power Mode and Cooling (if applicable): On a Mac Studio, you don’t have “Turbo” or “High Power” mode like MacBook Pros do, but you should still ensure the machine has adequate cooling (the Mac Studio’s fans should ramp up under load – make sure they’re not obstructed or overly dusty). The M1 Ultra will thermal throttle if it somehow overheats (less likely in a desktop chassis, but worth noting for sustained jobs). A cool environment can sustain peak GPU frequency for longer, which helps throughput.

Parallelism and Batch Inference: If you’re serving an LLM with Ollama (which acts as a local API server), you might handle multiple requests or streams at once. The M1 Ultra has 20 CPU cores (16 performance + 4 efficiency) and a 64-core GPU, which can handle some parallel work. However, single large model inference is mostly bound by the GPU compute for each token. You won’t easily get more tokens/sec by running two queries concurrently – they’ll just share resources. For maximum single-query performance, focus on one model at a time. If you need to serve multiple users or models, consider running one model on GPU and another on CPU (or using two separate Mac machines), to avoid contention. Also, when running a generation, try to avoid other heavy GPU tasks (don’t, say, be gaming or running a video export) which could also contend for that 96 GB GPU allocation.

Use Efficient Serving Backends: Tools like vLLM, TGI (Text Generation Inference), or others can optimize prompt handling and token outputs for throughput. On Mac these may run in CPU mode (since vLLM doesn’t yet use Metal), but they can still leverage multi-threading and batch prompts together. If your goal is to maximize token output throughput for many prompts, a specialized server like vLLM might offer better efficiency in how it uses the CPU cache and memory. (For example, vLLM is designed to reuse KV cache across requests to avoid re-computation, which can be beneficial even on CPU.) Just note that any CPU-based approach will be slower per token than GPU-based inference.
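To make the context-length tip above more concrete, here is a minimal Python sketch of the usual KV cache size estimate: keys and values are each stored per layer, per KV head, per token. The function name is hypothetical, and the model shape used in the example (80 layers, 8 KV heads, head dimension 128, 16-bit cache) is an assumption chosen to resemble a LLaMA-2-70B-style model; check your model’s actual configuration before relying on the numbers.

```python
def kv_cache_bytes(n_ctx: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Estimate KV cache size in bytes for a single sequence.

    The leading factor of 2 accounts for storing both keys and values.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_ctx


if __name__ == "__main__":
    # Assumed LLaMA-2-70B-style shape (illustrative, not read from a model file).
    shape = dict(n_layers=80, n_kv_heads=8, head_dim=128, bytes_per_value=2)
    for n_ctx in (2048, 8192, 32768):
        gib = kv_cache_bytes(n_ctx, **shape) / 2**30
        print(f"context {n_ctx:>6} tokens: ~{gib:.3f} GiB of KV cache")
```

Because the estimate is linear in ``n_ctx``, moving from 2048 to 8192 tokens multiplies the cache size by exactly four, which is the effect described above. Models without grouped-query attention have far more KV heads, so their cache grows correspondingly faster.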

Conclusion and Further Reading

In summary, the reason your M1 Ultra shows only ~96 GB for LLMs is due to Apple’s Metal memory management, which by default caps GPU memory usage to about 75% of unified RAM. This is a system-level safeguard, not a flaw in Ollama. To fully utilize your 128 GB machine for large models, you can apply the ``sysctl`` tweak to raise the limit (e.g. up to ~120 GB GPU use), and employ other strategies like quantization and careful context length management to stay within budget. With these adjustments, a Mac Studio M1 Ultra becomes a very capable box for local LLM inference – able to run models in the 70B parameter class (and beyond, with quantization) entirely from memory, something traditional GPUs (with fixed VRAM) often cannot do.

For more details and community discussions, you may find the following resources helpful:

Apple Developer Forums – “recommendedMaxWorkingSetSize – is there a way to use all our unified memory for GPU?” (discussion of the 75% limit and Apple’s stance).

GitHub: llama.cpp Issue #1870 – GPU Memory problem on Apple M2 Max 64GB (users report the 75% limit and share performance observations).

Peddal’s Blog – Optimizing VRAM Settings for Local LLM on macOS (step-by-step guide to using ``sudo sysctl iogpu.wired_limit_mb`` to increase usable VRAM, with examples).

Reddit: r/LocalLLaMA – various threads (e.g. users running 70B on 96GB Macs, etc.) where the 75% memory issue is discussed and the ``iogpu.wired_limit`` workaround is shared. (Look for keywords “VRAM 75%” or “iogpu.wired_limit” in those discussions.)

Hacker News: High-RAM Apple Silicon for large models? – contains insights into Apple GPU vs NVIDIA, and limitations of the Metal backend for ML.

Ollama’s documentation – for any settings related to performance, and llama.cpp’s README for tips on Metal and thread tuning.

By combining these techniques, you should be able to max out your Mac Studio’s capabilities, running cutting-edge LLMs locally with as much of that 128 GB put to work as possible. Good luck, and happy experimenting with your local AI models!

Buying a GPU for Local Models (LLMs)

Buying a GPU for Running Large Parameter Models (LLMs) on Your Local Machine

Introduction and Motivation

Running large language models locally comes with one major challenge: memory. I learned this firsthand using an NVIDIA RTX 4090 with 24 GB of VRAM. While the 4090’s raw performance is stellar, its limited VRAM quickly became a bottleneck when attempting to run models beyond roughly 30 billion parameters – the largest models simply wouldn’t fit in memory, leading to crashes or severe slowdowns. In other words, memory size is key – even more important than memory speed or GPU compute power when it comes to huge LLMs.

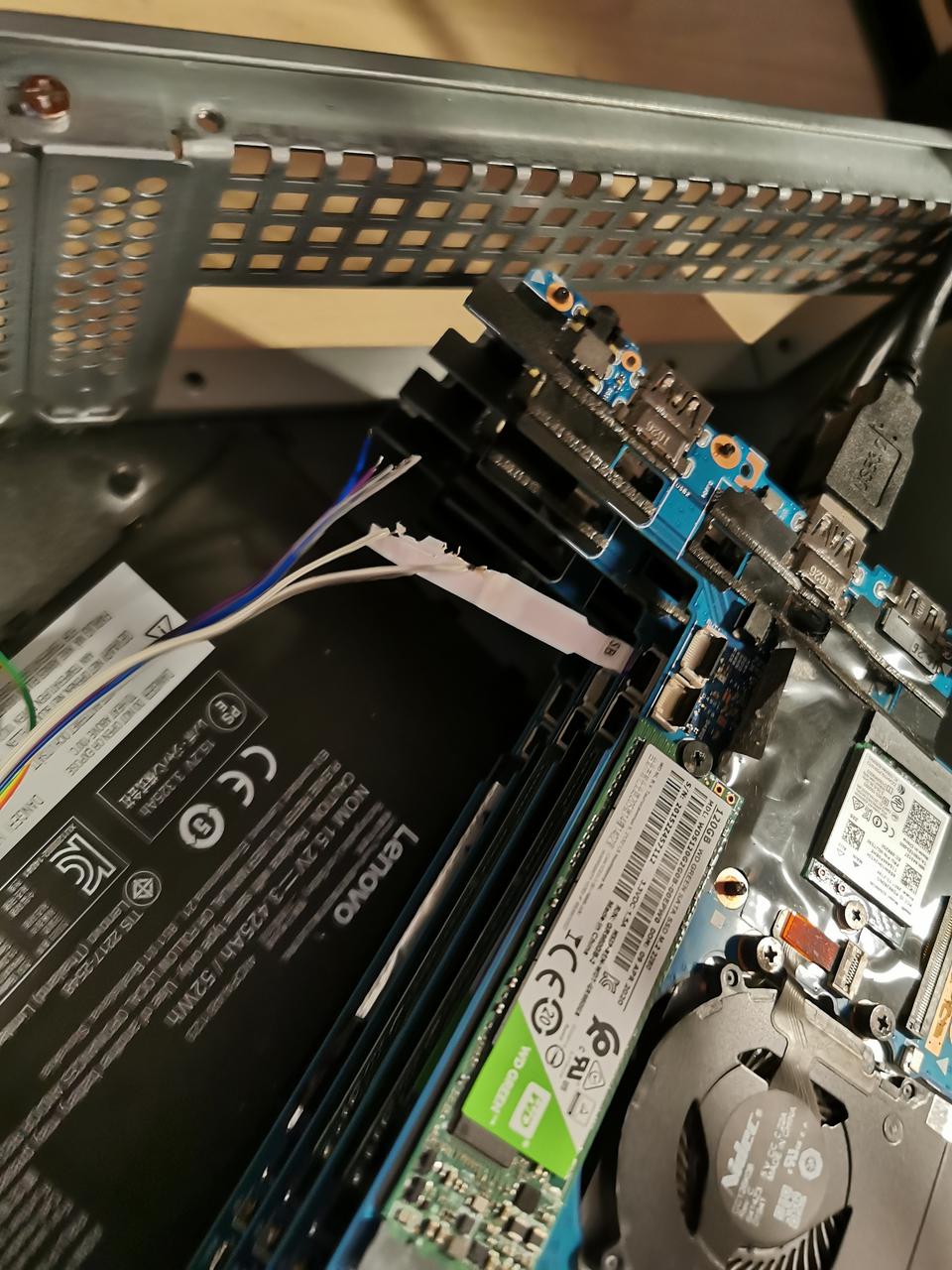

This realization led me to explore alternatives that offer more memory per dollar. I discovered that Apple Silicon, specifically the Apple M1 Ultra system-on-chip with 128 GB of unified memory, provides an excellent GB/$ ratio for local AI work. Unlike a discrete GPU, Apple’s unified memory architecture lets the GPU dynamically use a large pool of system memory at very high bandwidth (around 800 GB/s). Practically, this meant I could load models that a 24 GB GPU could not. For example, the DeepSeek R1 70B model (70 billion parameters) requires roughly 43–48 GB of memory even in quantized form – well above the 4090’s VRAM. On the M1 Ultra (128 GB), I was able to run DeepSeek 70B locally by using 5-bit quantization to reduce the model size to around 43 GB, fitting comfortably in unified memory. The high memory bandwidth helps feed the model data to the GPU cores efficiently, minimizing slowdowns. This was a revelatory experience: a compact desktop (Mac Studio with M1 Ultra) could handle a 70B model that would normally require multi-GPU servers.

It’s worth noting this experiment took place before Apple’s M3 Ultra chip was released. The M1 Ultra proved the value of massive memory capacity for LLMs. Since then, newer hardware (like M3 Ultra and upcoming NVIDIA GPUs) has further shifted the landscape by offering even more memory or bandwidth. In the remainder of this article, we’ll compare several consumer-grade hardware options for running large LLMs locally, focusing on memory capacity, bandwidth, quantization support, model size limits, performance (measured in tokens per second), power efficiency, and cost effectiveness. We’ll also mention which popular models (such as LLaMA, DeepSeek, Mistral, Mixtral, etc.) can be run on each platform given these constraints.

Key Considerations for Local LLM Hardware

Before diving into hardware specifics, it’s important to understand the factors that determine whether a given system can run a large LLM:

Memory Capacity (VRAM or Unified RAM): Large models demand tens or hundreds of gigabytes of memory. For example, a 65B parameter model in 16-bit floating point requires roughly 130 GB, and even 4-bit quantized versions can be 30–40 GB in size. If the model doesn’t fully fit in GPU memory, part of it must be offloaded to CPU RAM, which dramatically hurts throughput due to slower data transfer speeds. Apple’s unified memory allows using up to 128–192 GB (or more on newer systems) seamlessly for GPU tasks, whereas PC GPUs are currently limited to 24 GB (4090) or 48 GB (professional cards) per card. Having enough memory is the primary requirement to avoid performance issues.

Memory Bandwidth: Once a model fits, how quickly the GPU can access model data influences token generation speed. For instance, the NVIDIA RTX 4090 delivers about 1000 GB/s bandwidth from its VRAM, whereas Apple’s M1, M2, and M3 Ultra provide around 800 GB/s. Higher bandwidth means the model’s parameters and activations can be moved faster, which boosts tokens per second. (By comparison, CPU memory bandwidth is much lower, which is why pure CPU inference is significantly slower.)

Quantization and Model Precision: Running models at lower precision (such as int4/int5 via GGML or GGUF quantization) is a game-changer for local inference. Quantization can reduce a model’s size by 2–4× with minimal quality loss, enabling larger models to fit into limited memory. For example, LLaMA2 70B in 4-bit mode is roughly 35–40 GB—a size that could fit on a 48 GB GPU or in unified memory—whereas the full 16-bit model would be approximately 140 GB. While all the hardware options discussed can run quantized models, not all can handle full-precision models. In each section below, the maximum model size possible in full precision is noted where applicable.

Compute Throughput (GPU Cores/Tensor Cores): Once memory is sufficient, the raw speed of the GPU or accelerator determines how many tokens per second you can generate. NVIDIA’s GPUs excel with thousands of CUDA and Tensor cores, whereas Apple’s GPUs have fewer compute units but aim to compensate with efficiency. A high-end NVIDIA card may generate tokens faster on a given model, but if VRAM is insufficient, it may need to use a smaller quantization scheme or offload to CPU, affecting speed.

Power Efficiency: For users planning continuous inference or using a personal workstation, power consumption is important. A PC with a 4090 may draw 500–600 W under load, while Apple’s SoCs draw a fraction of that—often around 150–200 W for the entire system. Lower power usage can lead to long-term savings, less heat, and quieter operation.

Cost (Price per GB and Tokens per Dollar): Considering the high cost of consumer GPUs and Apple systems, it’s useful to compare cost effectiveness. For example, the M1 Ultra with 128 GB of unified memory offers a lower cost per GB than a single NVIDIA RTX 4090 (which has only 24 GB). However, if you are only working with models that fit in 24 GB, you may achieve higher tokens per second per dollar with an NVIDIA card. The trade-offs are clear: choose based on whether raw speed or maximum memory capacity is more important for your specific LLM use cases.
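The memory and bandwidth considerations above can be turned into two quick estimates. The Python sketch below uses hypothetical helper names. The weight-size formula is plain arithmetic (parameters × bits ÷ 8), while the tokens-per-second ceiling rests on an assumption that is not from the text above: that generating one token for a dense model at batch size 1 reads every weight once, so memory bandwidth divided by model size bounds the speed. Real throughput is typically lower.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes), excluding overhead."""
    return params_billion * bits_per_weight / 8


def max_tokens_per_sec(bandwidth_gb_s: float, model_gb: float) -> float:
    """Rough upper bound, assuming every weight is read once per generated token."""
    return bandwidth_gb_s / model_gb


if __name__ == "__main__":
    for params, bits in [(65, 16), (70, 16), (70, 8), (70, 4)]:
        print(f"{params}B at {bits}-bit: ~{weights_gb(params, bits):.0f} GB of weights")

    # Bandwidth figures are the approximate values quoted in this article.
    for name, bandwidth in [("Apple Ultra (~800 GB/s)", 800), ("RTX 4090 (~1000 GB/s)", 1000)]:
        ceiling = max_tokens_per_sec(bandwidth, 40)
        print(f"{name}: at most ~{ceiling:.0f} tokens/s for a 40 GB model, if it fits")
```

Quantized files carry some extra metadata and mixed-precision layers, which is why real 4-bit 70B files land nearer 35–40 GB than the bare 35 GB this arithmetic gives.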

Comparative Hardware Options for Local LLMs

In this section, we compare five consumer-level hardware setups for running large LLMs locally:

NVIDIA GeForce RTX 4090 (24 GB GDDR6X VRAM) – Current generation flagship GPU.

NVIDIA GeForce RTX 5090 (32 GB GDDR7 VRAM, upcoming) – Next-generation flagship (based on leaked specifications).

Apple M1 Ultra (128 GB Unified Memory) – Apple Silicon (2022) with unified CPU/GPU memory.

Apple M2 Ultra (192 GB Unified Memory) – Updated Apple Silicon (2023) with increased cores and memory.

Apple M3 Ultra (up to 512 GB Unified Memory) – Latest high-end Apple Silicon (2025).

For each, the discussion below covers memory capacity and bandwidth, ability to run quantized versus full-precision models, performance (tokens per second), power efficiency, cost, and example model sizes that run well.

### NVIDIA RTX 4090 (24 GB VRAM)

The RTX 4090 is a powerhouse GPU based on NVIDIA’s Ada Lovelace architecture. It features 16,384 CUDA cores and 24 GB of GDDR6X VRAM on a 384-bit bus, delivering approximately 1000 GB/s of memory bandwidth. Its strength is in raw throughput: for models that fit in 24 GB, the 4090 can generate tokens very quickly. For example, running a 7–13B parameter model (such as LLaMA-13B) with 4-bit quantization can yield generation speeds in the range of 100–130 tokens per second. Even a 30B model (when quantized) can run at high speed on this card.

However, 24 GB of VRAM is insufficient for the largest models. A 65B model quantized to 4-bit (approximately 35–40 GB) cannot fit entirely in a single 4090’s memory. Workarounds include enabling CPU offloading, but that results in significant slowdowns as the GPU waits for data from system RAM. As a result, the RTX 4090 excels for models up to about 30B but is limited when it comes to 65B–70B models (though these can run with compromises).

On the plus side, the RTX 4090 is well supported by frameworks such as exllama and TensorRT. With the right optimizations, users have achieved around 30–35 tokens per second even on a 70B model by streaming weights from the CPU. In summary, the 4090 offers excellent performance per dollar for midsize LLMs, but its 24 GB VRAM creates an upper limit on model size.

### NVIDIA RTX 5090 (Leaked Next-Gen, 32 GB VRAM)

While NVIDIA has not officially released the RTX 5090, credible leaks suggest it will feature 32 GB of GDDR7 VRAM on a widened 512-bit bus, with a memory bandwidth of roughly 1.8 TB/s. This upgrade will increase the maximum model size that can reside entirely on the GPU. Although a 70B model quantized to 4-bit (around 35–40 GB) may still push the limits, the increased VRAM makes it more feasible to handle larger or moderately quantized models with minimal offloading.

Performance improvements are expected from increased core counts and higher bandwidth. Extrapolations indicate token throughput could be 1.5–2× that of the 4090 on models that fit entirely on GPU, meaning significantly faster speeds on midsize models and improved performance on larger ones when partial offloading is needed. However, the RTX 5090 is also anticipated to come with higher cost and power consumption, making it a premium choice for enthusiasts willing to pay for cutting-edge performance.

### Apple M1 Ultra (128 GB Unified Memory)

The Apple M1 Ultra is an entirely different approach. Rather than a discrete GPU, it is a system-on-chip (SoC) that combines CPU, GPU, and memory into one package. With up to 128 GB of unified memory available to both CPU and GPU, and a memory bandwidth of around 800 GB/s, the M1 Ultra offers an outstanding memory capacity that allows very large models to be loaded entirely on device.

With this memory pool, models like LLaMA2-70B in 4-bit (about 35–40 GB) or even 8-bit quantized models (70–80 GB) can be loaded without needing to offload portions to the CPU. Although the integrated GPU in the M1 Ultra is not as fast as high-end NVIDIA offerings (with a 70B model running at around 8–12 tokens/sec compared to higher speeds on a 4090), the system shines in its energy efficiency and simplicity. A Mac Studio built with M1 Ultra typically draws only about 150 W under load, in contrast to the much higher power draws of high-end discrete GPUs.

In cost terms, while the system price of around $5,000 is premium, the cost per gigabyte of memory is very competitive compared to building a multi-GPU PC.

### Apple M2 Ultra (192 GB Unified Memory)

The Apple M2 Ultra (2023) builds on the strengths of the M1 Ultra. It can be configured with up to 192 GB of unified memory and features a GPU with up to 76 cores. While the memory bandwidth remains at about 800 GB/s, the M2 Ultra’s more powerful GPU improves performance—making it roughly 20–30% faster than the M1 Ultra on many tasks.

The increased memory capacity allows for even larger models or larger contexts, such as loading a full LLaMA2-70B in FP16 (if carefully optimized) or running two large models simultaneously. Although the token generation speed on very large models is still limited (around 36 tokens/sec on a 13B model, for instance), the M2 Ultra represents the upper limit of single-machine LLM hosting for many real-world applications, while consuming far less power than an equivalent NVIDIA system.

### Apple M3 Ultra (512 GB Unified Memory, 2025)

The latest in Apple’s lineup, the M3 Ultra, takes things to a new extreme. With configurations available up to 512 GB of unified memory and an 80-core GPU, it is designed for tasks that require massive memory capacity. Although the memory bandwidth remains similar at roughly 800 GB/s, the overall architectural improvements and the sheer number of GPU cores significantly increase compute performance.

For LLM practitioners, the 512 GB option means that virtually any open-source model available—even future models with hundreds of billions of parameters—can be loaded on a single machine. In practice, this allows researchers to experiment with extremely large models, long context lengths, or even load multiple models concurrently. While the price for such configurations can be well above $10,000, the balance of extreme memory, acceptable compute speeds, and exceptional power efficiency makes the M3 Ultra an attractive platform for those who need uncompromised model size within a desktop environment.

Comparative Summary

Below is a summary table comparing these hardware options:

| Hardware | Memory | Bandwidth | Power (TDP) | Approx Price |
|---|---|---|---|---|
| NVIDIA RTX 4090 | 24 GB GDDR6X | ~1000 GB/s | 450 W | ~$1,600 (GPU only) |
| NVIDIA RTX 5090 | 32 GB GDDR7 | ~1.8 TB/s | ~600 W (est.) | ~$2,000 (est.) |
| Apple M1 Ultra | 64–128 GB Unified | ~800 GB/s | ~90 W (chip) | ~$5,000 (system) |
| Apple M2 Ultra | 64–192 GB Unified | ~800 GB/s | ~90 W (chip) | ~$4,000–$7,000 (system) |
| Apple M3 Ultra | 96–512 GB Unified | 800+ GB/s | ~150 W (est.) | ~$5,000–$10,000+ (system) |

Note: Prices for NVIDIA GPUs are for the card only, while Apple system prices reflect full workstation configurations. Bandwidth and power for the RTX 5090 and M3 Ultra are based on early leaks and estimates.
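For a rough sense of memory per dollar, the Python sketch below divides the approximate prices in the table by memory capacity. It covers only the rows with a single price point, and pairing the ~$5,000 M1 Ultra price with the 128 GB configuration follows the M1 Ultra section above. The function name is hypothetical, and the comparison is lopsided by construction: GPU prices exclude the rest of the PC, and by default only about 75% of unified memory is available to the GPU on a Mac.

```python
def cost_per_gb(price_usd: float, memory_gb: float) -> float:
    """Price divided by memory capacity, in USD per GB."""
    return price_usd / memory_gb


# (memory in GB, approximate price in USD), taken from the table above.
OPTIONS = {
    "NVIDIA RTX 4090 (GPU only)": (24, 1600),
    "NVIDIA RTX 5090 (GPU only, est.)": (32, 2000),
    "Apple M1 Ultra 128 GB (system)": (128, 5000),
}

if __name__ == "__main__":
    for name, (memory_gb, price_usd) in OPTIONS.items():
        print(f"{name}: ~${cost_per_gb(price_usd, memory_gb):.2f} per GB")
```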

A Few Key Observations

Memory per Dollar: Apple’s offerings provide a tremendous amount of memory relative to their cost. For users whose primary goal is to run very large models, a Mac Studio with 128–192 GB (or even 512 GB) of unified memory can be more cost-effective than assembling a multi-GPU system to achieve similar memory capacity.

Raw Performance: NVIDIA GPUs lead in raw token generation throughput when the model fits entirely in VRAM. For models that do, the RTX 4090 (and the upcoming RTX 5090) offer faster response times. However, for extremely large models that exceed discrete GPU VRAM, performance may be compromised due to CPU offloading, at which point Apple Silicon’s unified memory systems offer a distinct advantage.

Scalability: NVIDIA’s multi-GPU configurations can further boost performance, although the complexity and power requirements rise. Apple’s integrated systems, while excellent in memory capacity, do not currently support multi-unit scaling in the same manner.

Power and Efficiency: Apple’s SoCs are far more power-efficient than high-end discrete GPUs. This is an important consideration for 24/7 inference tasks or when operating in thermally constrained environments.

Use Cases: For chat, coding, and inference tasks on models up to around 30B parameters, the NVIDIA RTX 4090 (or 5090) is an excellent choice. For models in the 65B–70B range or if absolute memory capacity is required for long-context or multiple simultaneous models, Apple’s M1, M2, and M3 Ultra systems offer a superior value proposition.

Conclusion

By understanding the memory requirements of large LLMs and the strengths of each hardware option, you can make an informed decision on the GPU (or SoC) to invest in for local AI work. Whether you choose the brute-force approach of a next-gen NVIDIA GPU or the memory-rich, energy-efficient approach of Apple Silicon, it’s an exciting time—the ability to run models that once required multi-GPU servers is now within reach on a desktop machine.

References

[llama.cpp Discussions on GitHub](https://github.com/ggml-org/llama.cpp/discussions/4167)

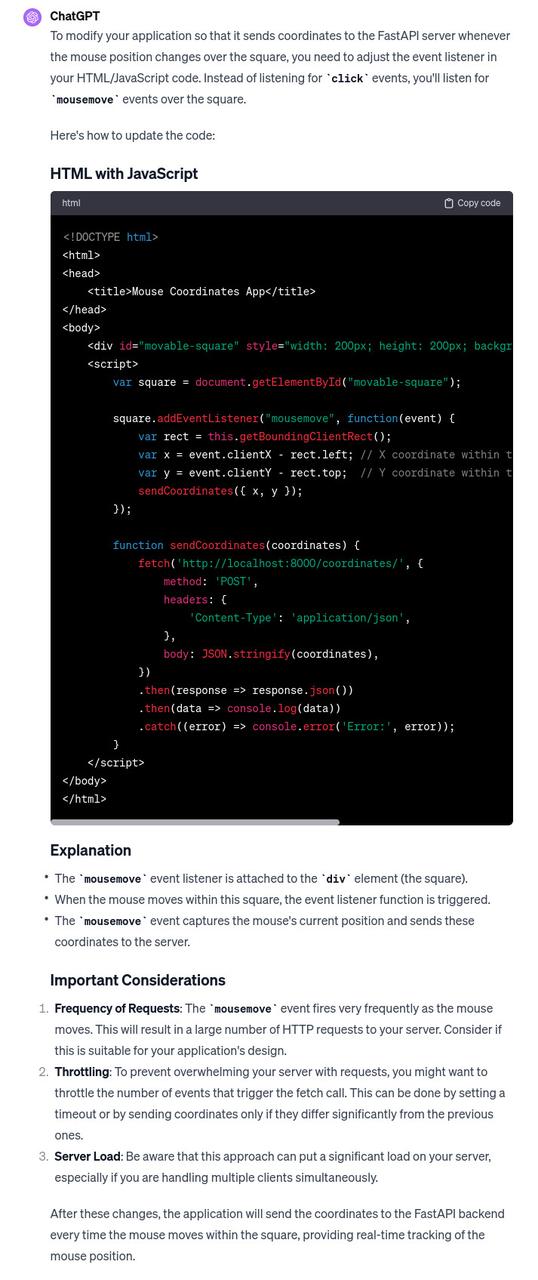

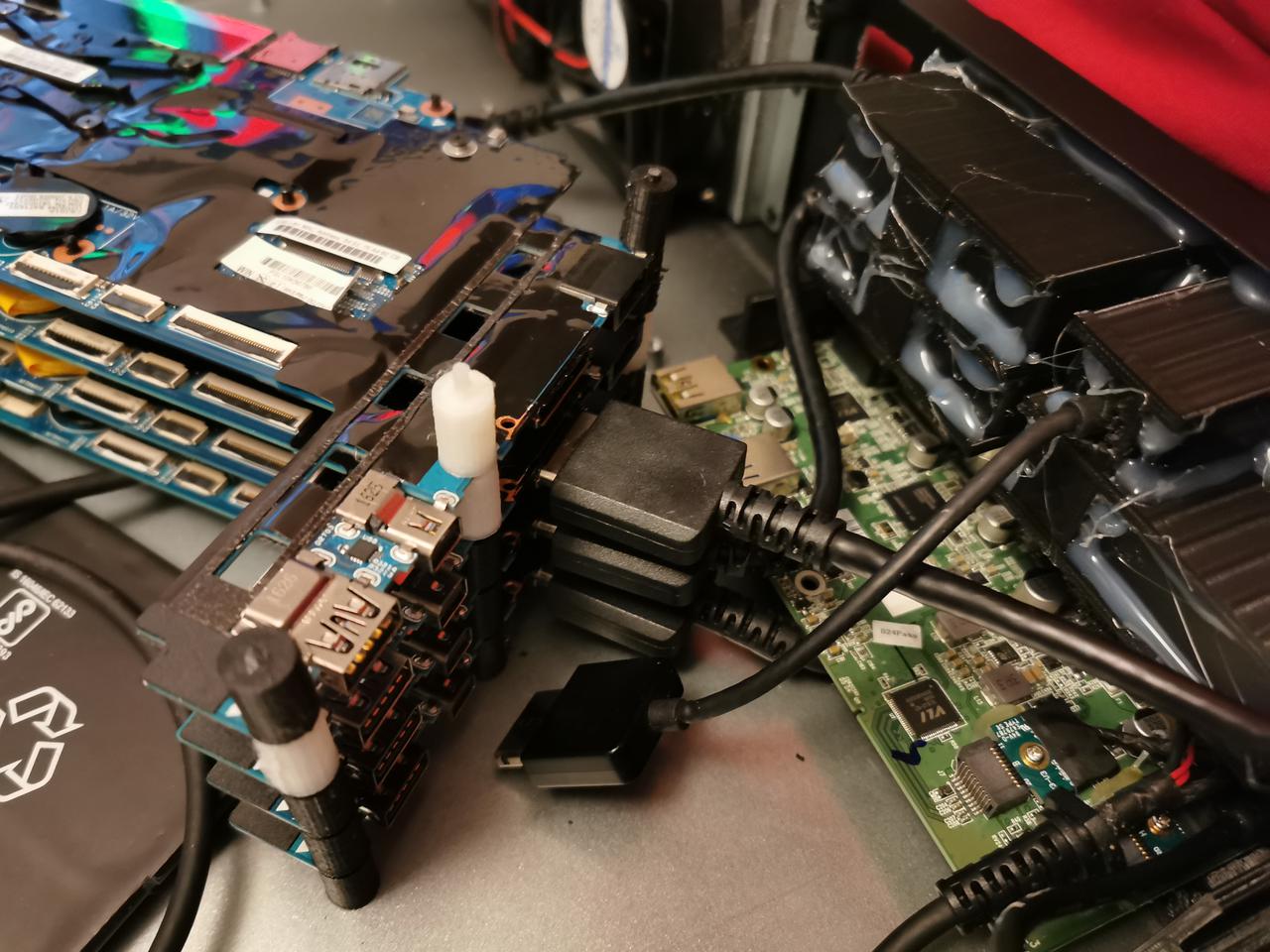

Building an Intelligent Nerf Turret with Jetson Nano: A Journey into DIY Robotics and AI

Introduction

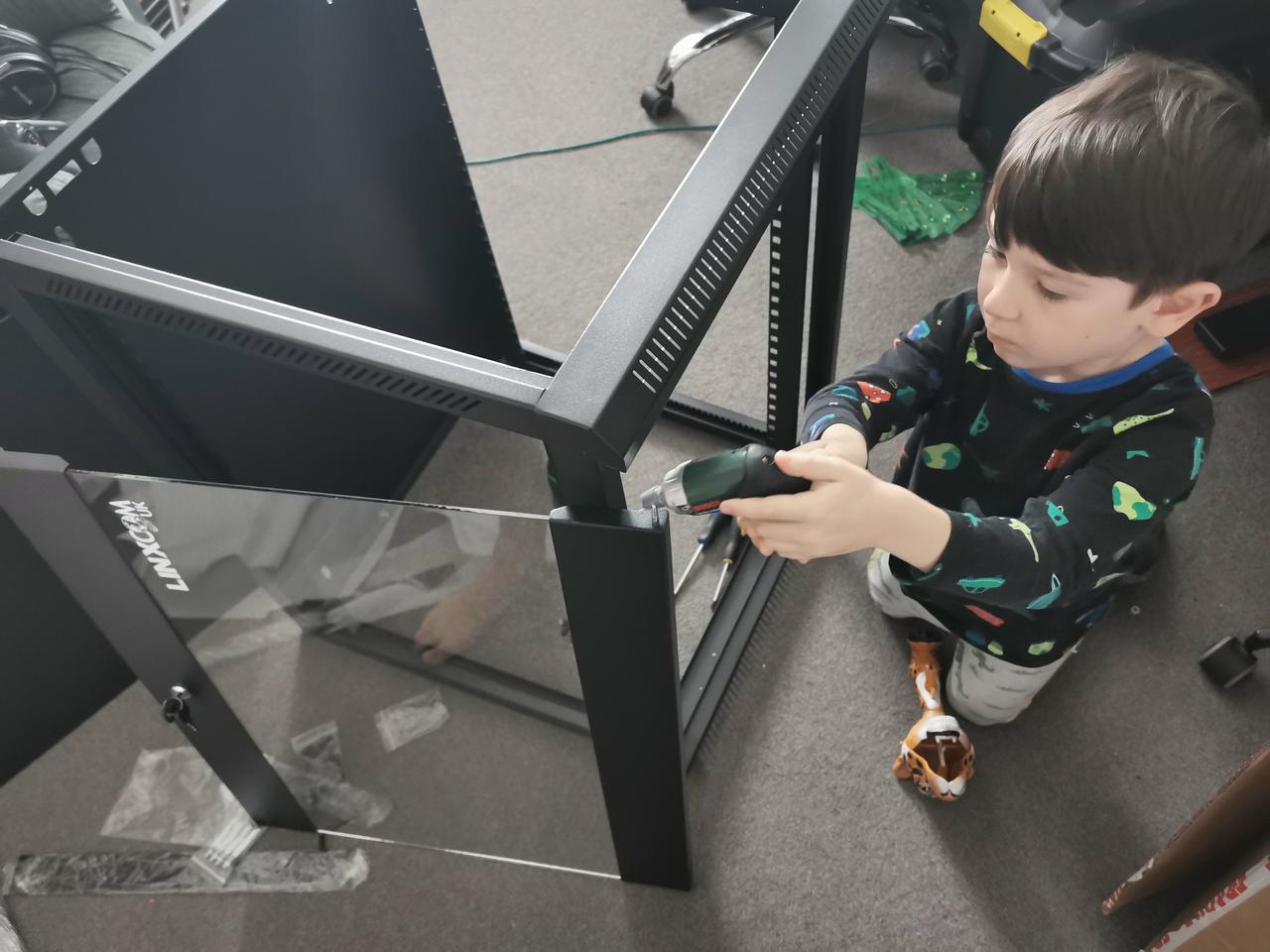

Imagine transforming a simple toy into an intelligent machine that recognises and interacts with its environment. That's exactly what I set out to do with a Nerf turret. The journey began with a desire to create something fun and educational for my son and me, and it evolved into an exploration of open-source hardware, machine learning, and robotics.

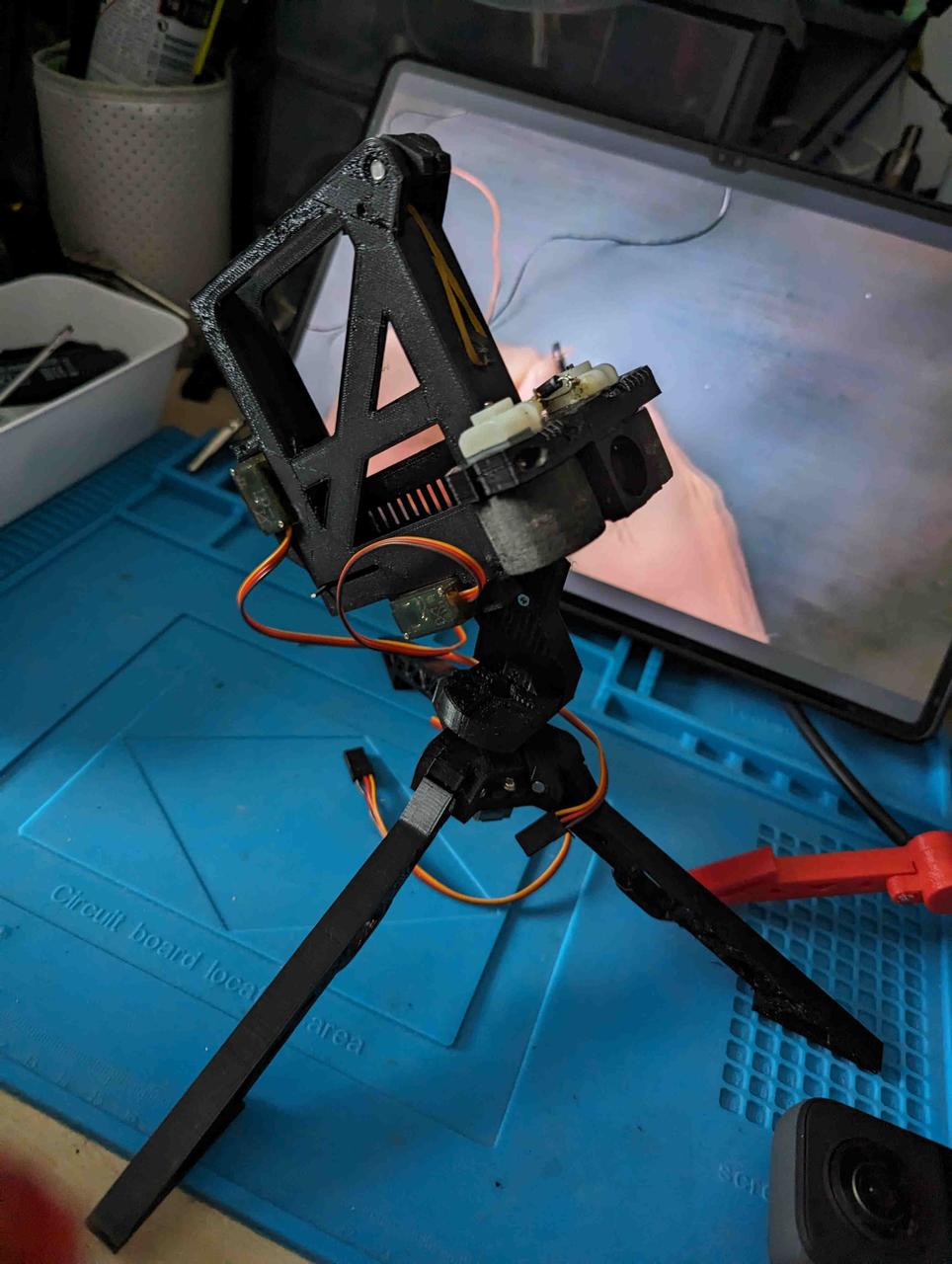

The Beginning: Assembling the Turret

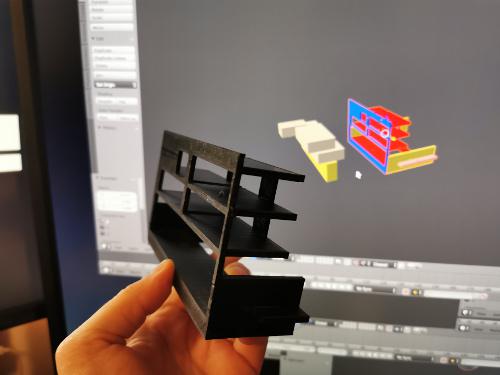

The project started with an open-source Nerf turret design:

https://www.littlefrenchkev.com/bluetooth-nerf-turret

I printed all the parts using a 3D printer and got to work.

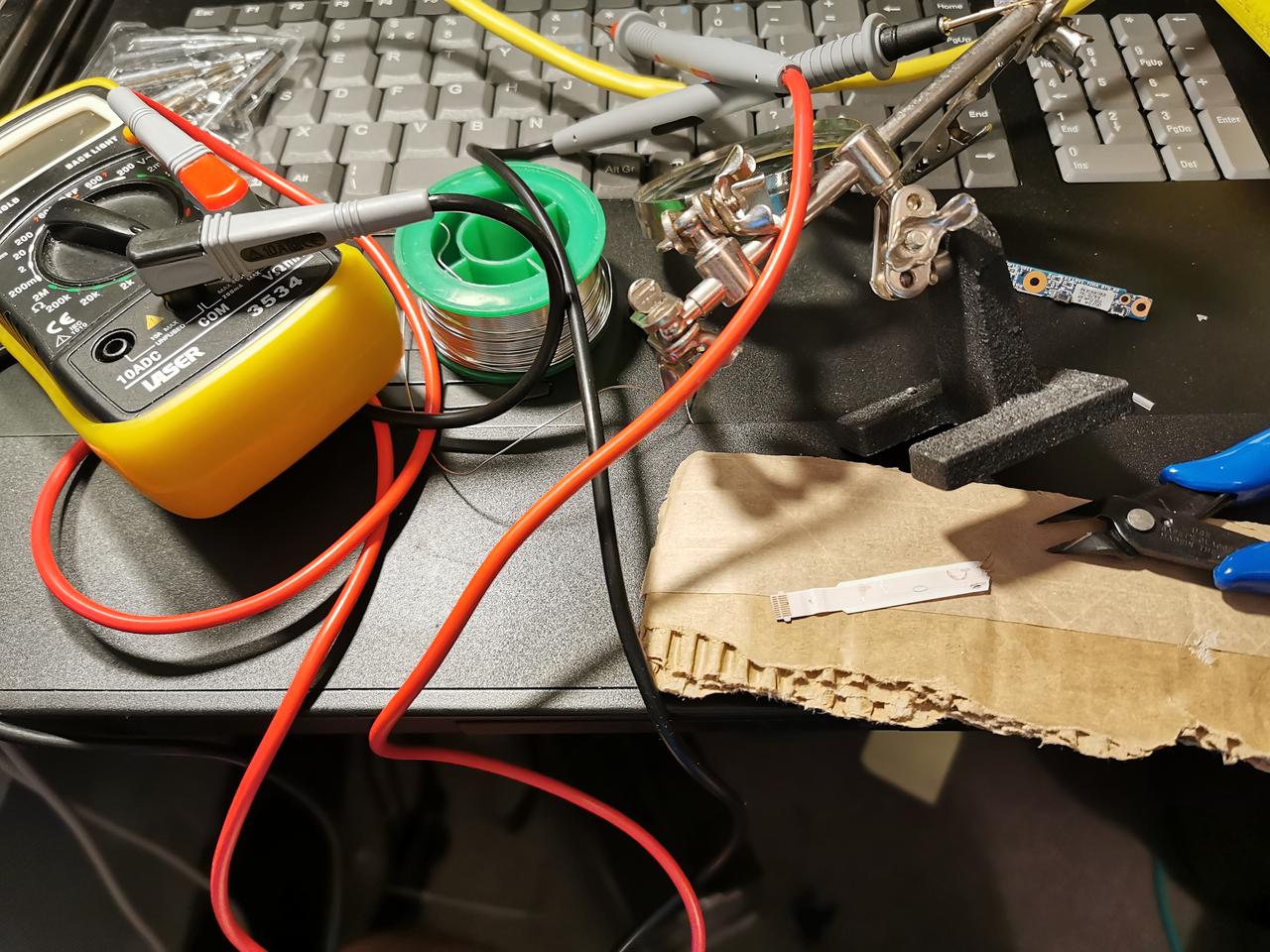

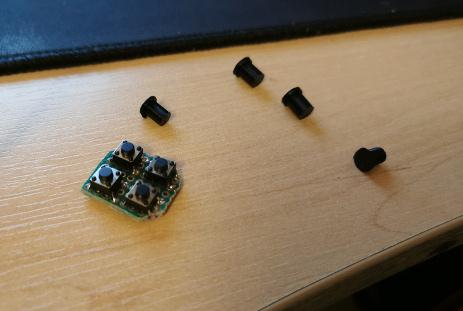

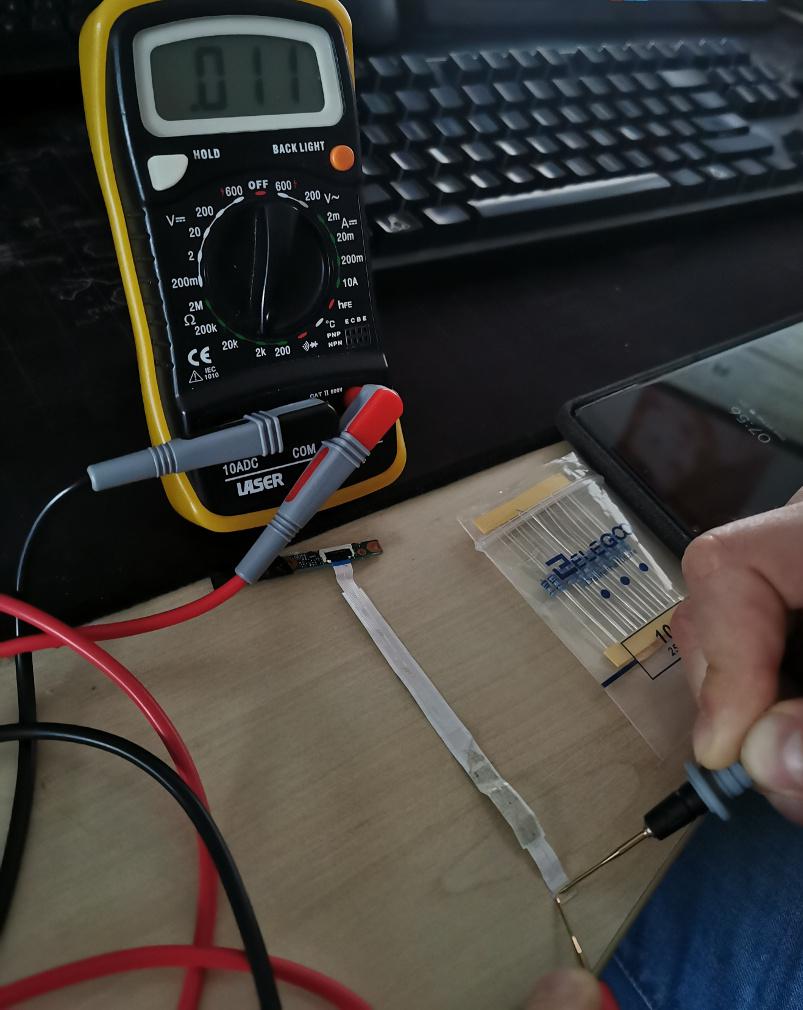

The assembly involved soldering components, integrating Arduino and servo motors for movement control, and programming the turret based on a project from the LittleFrenchKev website.

This initial phase was a chance to explain basic electronics and programming to my son.

The fun

From Manual to Autonomous: The Leap into AI

After playing with the manually controlled turret, I realised the potential to make it autonomous and "intelligent."

The challenge was to enable the turret to recognise and target objects autonomously.

With a background in basic OpenCV and Tesseract from a decade ago, I decided to delve deeper into machine learning.

The Decision: Why Jetson Nano?

Offline image recognition called for specialised hardware. The options were Jetson Nano, Google Coral, and Intel CS.

I chose Jetson Nano for its balance of accessibility, power, and community support.

With its AI/ML JetPack software and 128-core GPU, the Jetson Nano is the perfect fit for someone re-entering the world of AI and machine learning.

The Upgrade: Image Recognition and Servo Control

Initially, I planned to use a Kinect camera for its depth-sensing capabilities.

However, I ran into compatibility issues with the Python version in Nvidia's customised Ubuntu image for the Jetson Nano.

So, I switched to a full HD Logitech camera. I asked ChatGPT for help in using the camera for person detection and received comprehensive guidance, including code snippets and suggestions to use Nvidia's pre-trained models for person detection.

https://chat.openai.com/share/1120521a-1042-4971-9e33-a852b85178f5

https://chat.openai.com/share/8ace3024-bcc9-44c1-a08a-7a5658a0e3e3

The implementation succeeded – the turret could now recognise a person using the Logitech camera.

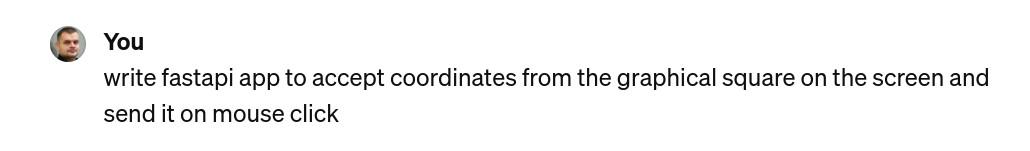

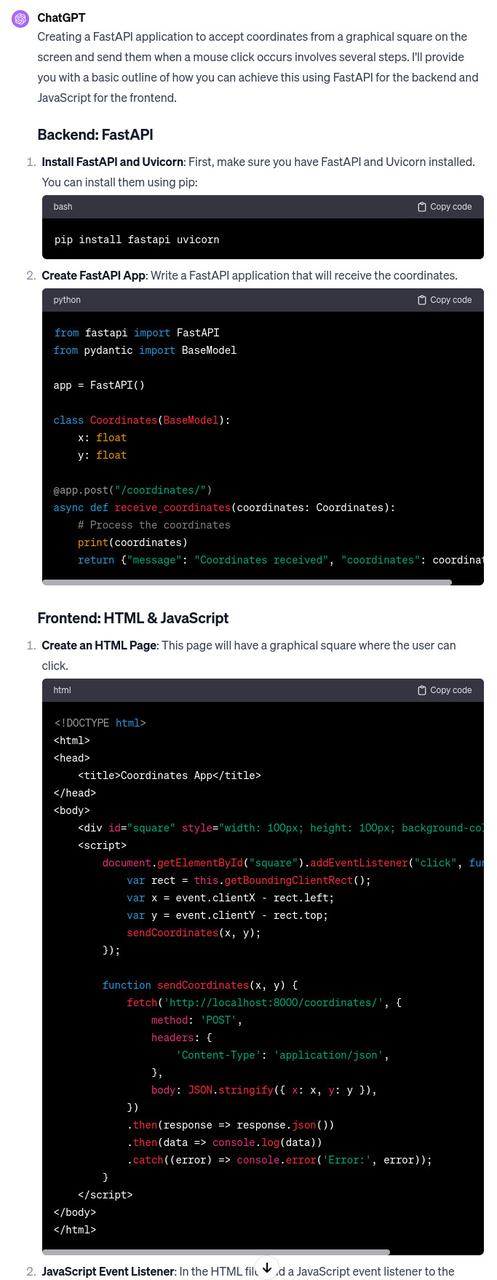

Integrating AI with Mechanics

The next step was to translate the detected coordinates into servo movements.

I slightly modified the existing application to transform it into a web API that could accept coordinates.

This meant that the turret was not just a passive observer but could actively interact with its environment.
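
A minimal sketch of that translation, assuming a 1920x1080 frame from the full HD camera, pan and tilt servos that each accept angles from 0 to 180 degrees, and a detection reported as a bounding box in pixels. The function names and the servo range are hypothetical, since the original application's code is not shown here. Depending on how the servos are mounted, one or both axes may need to be inverted.

```python
FRAME_WIDTH, FRAME_HEIGHT = 1920, 1080      # full HD camera frame (pixels)
SERVO_MIN_DEG, SERVO_MAX_DEG = 0.0, 180.0   # assumed servo travel


def scale(value, in_max, out_min, out_max):
    """Linearly map value from [0, in_max] to [out_min, out_max], clamped."""
    ratio = min(max(value / in_max, 0.0), 1.0)
    return out_min + ratio * (out_max - out_min)


def box_to_servo_angles(left, top, right, bottom):
    """Aim at the centre of a detection box; returns (pan, tilt) in degrees."""
    center_x = (left + right) / 2
    center_y = (top + bottom) / 2
    pan = scale(center_x, FRAME_WIDTH, SERVO_MIN_DEG, SERVO_MAX_DEG)
    tilt = scale(center_y, FRAME_HEIGHT, SERVO_MIN_DEG, SERVO_MAX_DEG)
    return round(pan, 1), round(tilt, 1)


# A person detected in the middle of the frame maps to the servo midpoints.
print(box_to_servo_angles(860, 440, 1060, 640))  # Output: (90.0, 90.0)
```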

To add an interactive element, I created a simple HTML interface where the turret's movement followed the mouse cursor. A click would prompt the turret to "shoot."

With the help of ChatGPT!

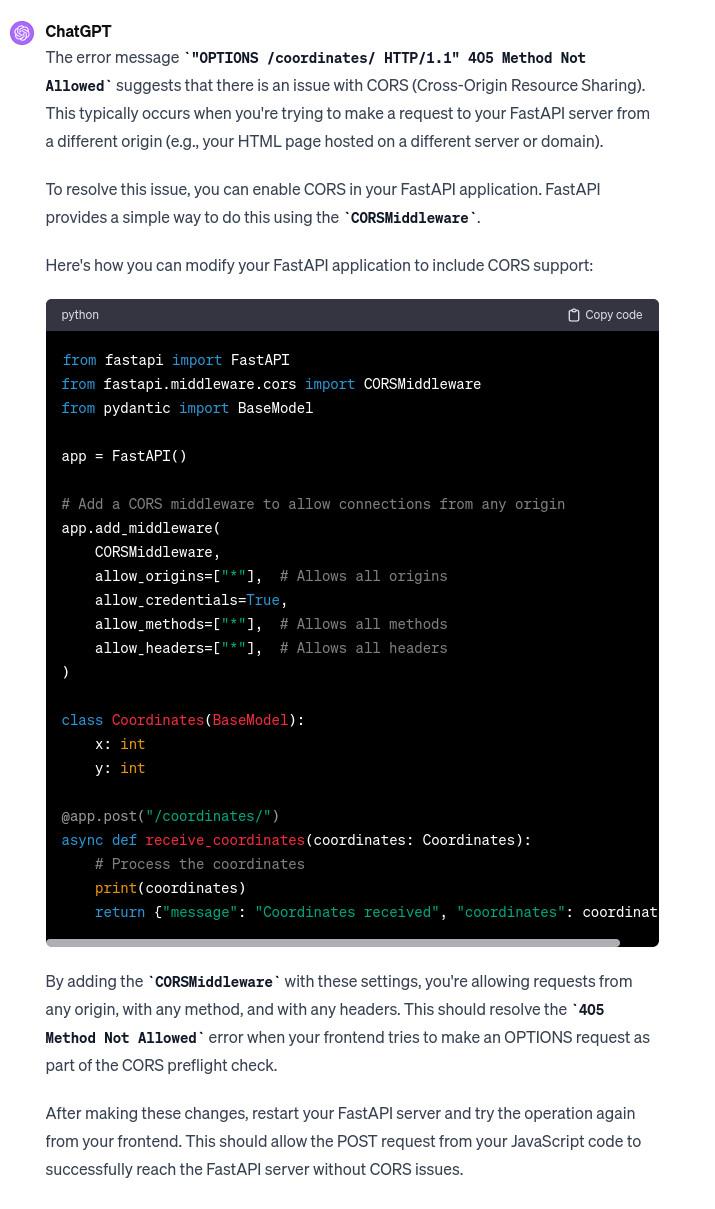

After launching and opening the application, I of course ran into some CORS errors.

But even if I hadn't known what CORS was, I could simply have pasted the error into ChatGPT to get an answer. Scary!

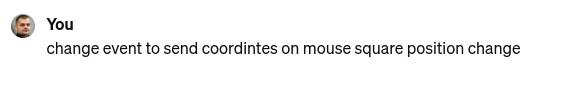

Later I wanted the turret to follow my mouse live. Note: without an architectural mindset, it is easy to miss that sending every mouse-coordinate change through an HTTP API is crazy, because it would overload the server. Happily, ChatGPT tells us about it, just in case we have no experience with infrastructure or application workflows.

What it didn't suggest is that WebSockets would be the best fit for this specific use case.

https://chat.openai.com/share/10b53b4b-d5df-41f7-9723-abde7da934e9

This feature made the turret a demonstration of AI and robotics and an engaging toy.

Conclusion: More Than Just a Toy

This project was a journey through various domains:

3D printing

electronics

programming

AI

robotics

It was a learning experience fueled by curiosity and the desire to create something unique. The Nerf turret, now equipped with AI capabilities, stands as a testament to the power of open-source projects and the accessibility of modern technology. What started as a fun project with my son became a gateway into the fascinating world of AI and robotics, demonstrating that anyone can step into the world of DIY AI projects with curiosity and the right tools.

Python antipatterns

Global variables antipattern

Global variables make code harder to reason about, test, and debug. Instead, use local variables or pass variables as function arguments.
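
A minimal sketch of the difference, using a hypothetical counter. The names are illustrative only:

```python
# Antipattern: the function silently depends on and modifies module-level state.
counter = 0


def increment_global():
    global counter
    counter += 1


# Alternative: state comes in as an argument and goes out as a return value,
# so the function can be tested in isolation.
def increment(value: int) -> int:
    return value + 1


increment_global()
print(counter)       # Output: 1
print(increment(0))  # Output: 1
```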

Mutating arguments antipattern

Modifying arguments passed to a function can lead to unintended side effects and make code harder to understand. Instead, create a copy of the argument and modify the copy. In Python, mutating arguments means modifying the value of an argument passed to a function. Here's an example of a function that mutates an argument

    def add_one(numbers):
        for i in range(len(numbers)):
            numbers[i] += 1
        return numbers

    original_numbers = [1, 2, 3]
    new_numbers = add_one(original_numbers)
    print(original_numbers)  # Output: [2, 3, 4]
    print(new_numbers)  # Output: [2, 3, 4]

In this example, the add_one function takes a list of numbers as an argument. The function uses a for loop to iterate over the list and add 1 to each element. When the add_one function is called with the original_numbers list, the function modifies the list in place by adding 1 to each element.

The problem with this approach is that modifying the original list can lead to unintended side effects and make code harder to understand. To avoid this issue, it's better to create a new list inside the function and return the new list without modifying the original list. Here's an example of how to do this:

    def add_one(numbers):
        new_numbers = [num + 1 for num in numbers]
        return new_numbers

    original_numbers = [1, 2, 3]
    new_numbers = add_one(original_numbers)
    print(original_numbers)  # Output: [1, 2, 3]
    print(new_numbers)  # Output: [2, 3, 4]

In this updated example, the add_one function creates a new list new_numbers by using a list comprehension to add 1 to each element of the numbers list. The function then returns the new list without modifying the original list. This approach is safer and makes the code easier to understand and maintain.

Using eval() or exec() antipattern

Using eval() or exec() can be dangerous because they allow arbitrary code execution. Instead, use safer alternatives such as ast.literal_eval(), which only parses Python literals. In Python, eval() and exec() are built-in functions that allow you to execute dynamic code. However, using them can be risky and potentially dangerous if not used properly. Here are some considerations when using eval() or exec():

Security risks: Using eval() or exec() with untrusted input can lead to security vulnerabilities. If the input contains malicious code, it can be executed with the same privileges as the program itself, which can potentially harm the system.

Debugging issues: When using eval() or exec(), it can be difficult to debug issues that arise. The code exists only as a string until it runs, so linters and static analysis cannot inspect it, which makes it harder to pinpoint the source of errors.

Performance impact: Using eval() or exec() can have a performance impact since the string has to be parsed and compiled each time it is evaluated. If the code is executed frequently, it can slow down the program.

Readability: Code that uses eval() or exec() can be harder to read and understand since it's not immediately clear what the code will do at runtime.

Alternative solutions: In most cases, there are better and safer alternatives to using eval() or exec(). For example, instead of using eval() to execute a string as code, you can use a function that takes arguments and returns a value.

Here's an example of how to use eval():
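
A minimal sketch matching the description that follows. The values 2 and 3 are illustrative assumptions:

```python
x = 2
y = 3

# eval() parses the string as an expression and evaluates it in the
# current namespace, so x and y refer to the variables defined above.
result = eval('x + y')
print(result)  # Output: 5
```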

In this example, the eval() function is used to evaluate the string 'x + y' as a Python expression. The values of x and y are substituted into the expression, and the result of the expression is returned. However, this code can be risky if the string 'x + y' is supplied by user input since it can contain arbitrary code that can be executed with the same privileges as the program itself.

In general, it's best to avoid using eval() or exec() unless there is no other option. If you do need to use them, make sure to properly validate and sanitize input and limit the scope of execution as much as possible.
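
When the input is expected to be plain data, ast.literal_eval() from the standard library is one such option. The sketch below, with made-up input strings, shows that it accepts a list literal but rejects an expression that tries to call a function:

```python
import ast

user_input = "[1, 2, 3]"
numbers = ast.literal_eval(user_input)  # only literals are accepted
print(numbers)  # Output: [1, 2, 3]

malicious = "__import__('os').getcwd()"
try:
    ast.literal_eval(malicious)
    rejected = False
except (ValueError, SyntaxError):
    # Function calls are not literals, so nothing is executed.
    rejected = True
print(rejected)  # Output: True
```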

Not using with statements antipattern

Not using with statements for file I/O can lead to resource leaks and potential security vulnerabilities. Always use with statements to ensure that files are properly closed. In Python, the with statement is used to ensure that a resource is properly managed and released, even if an exception occurs while the code is executing. Not using the with statement can lead to bugs, resource leaks, and other issues. Here's an example of how to use the with statement:

    with open('file.txt', 'r') as f:
        data = f.read()
        # do something with data
    # the file is automatically closed when the 'with' block is exited

In this example, the with statement is used to open the file 'file.txt' for reading. The code inside the with block reads the contents of the file into a variable data. When the block is exited, the file is automatically closed, even if an exception is raised while reading the file.

If you don't use the with statement to manage resources, you need to manually manage the resource yourself by opening and closing the resource explicitly. Here's an example of how to open and close a file without using the with statement:
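
A minimal sketch of the manual version described next. The first few lines are setup only: they create a small file in a temporary directory so the snippet runs on its own, and the path and file contents are illustrative assumptions.

```python
import os
import tempfile

# Setup only: create a small file so the example is self-contained.
path = os.path.join(tempfile.mkdtemp(), 'file.txt')
with open(path, 'w') as setup_file:
    setup_file.write('hello')

# Manual resource management: open, use, and always close.
f = open(path, 'r')
try:
    data = f.read()
    # do something with data
finally:
    f.close()  # runs whether or not the try block raised

print(f.closed)  # Output: True
```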

In this example, the file is opened using the open() function and assigned to the variable f. The try block reads the contents of the file into a variable data. The finally block ensures that the file is closed after the try block is executed, even if an exception is raised.

While this approach works, using the with statement is generally considered to be cleaner and more readable. Additionally, the with statement ensures that the resource is properly managed and released, even if an exception is raised while the code is executing, making it more robust and less error-prone.

Ignoring exceptions

Ignoring exceptions can lead to hard-to-debug errors and security vulnerabilities. Always handle exceptions properly and provide meaningful error messages. Ignoring exceptions in Python can lead to bugs and unexpected behavior, and it is generally considered an antipattern. When an exception is raised, it is usually an indication that something has gone wrong and needs to be addressed. Ignoring the exception can mask the underlying problem and make it harder to diagnose and fix the issue.

Here's an example of ignoring an exception:
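
A minimal sketch of the pattern. The function name and input are hypothetical:

```python
def read_port(text):
    try:
        return int(text)
    except Exception:
        pass  # the error is silently discarded


port = read_port("80a")
print(port)  # Output: None
```

The caller receives None with no hint that the input was invalid.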

In this example, the try block contains code that may raise an exception. The except block catches any exception that is raised and ignores it, effectively doing nothing. This can lead to subtle bugs and unexpected behavior, as the exception may have important information about what went wrong.

Instead of ignoring exceptions, it's generally better to handle them in a meaningful way. Depending on the situation, you may want to log the exception, display an error message to the user, or take some other action to address the issue. Here's an example of handling an exception:

    try:
        risky_operation()  # placeholder for some code that may raise an exception
    except SomeException as e:
        # handle the exception in a meaningful way
        log_error(e)
        display_error_message("An error occurred: {}".format(str(e)))

In this example, the except block catches a specific exception (SomeException) and handles it in a meaningful way. The exception is logged using a log_error() function, and an error message is displayed to the user using a display_error_message() function.

By handling exceptions in a meaningful way, you can make your code more robust and easier to maintain, as well as making it easier to diagnose and fix issues when they arise.

Overusing inheritance antipattern

Overusing inheritance can make code harder to understand and maintain. Instead, favor composition and use inheritance only when it makes sense. Inheritance is a powerful feature of object-oriented programming that allows one class to inherit the properties and methods of another class. However, overusing inheritance can lead to code that is difficult to understand and maintain. Here is an example of overusing inheritance in Python:

    class Animal:
        def __init__(self, name, species):
            self.name = name
            self.species = species

        def move(self):
            print(f"{self.name} is moving")

    class Dog(Animal):
        def __init__(self, name):
            super().__init__(name, "dog")

        def bark(self):
            print("Woof!")

    class Cat(Animal):
        def __init__(self, name):
            super().__init__(name, "cat")

        def meow(self):
            print("Meow!")

    class GermanShepherd(Dog):
        def __init__(self, name):
            super().__init__(name)
            self.breed = "German Shepherd"

    class Siamese(Cat):
        def __init__(self, name):
            super().__init__(name)
            self.breed = "Siamese"

    class Mutt(Dog):
        def __init__(self, name):
            super().__init__(name)
            self.breed = "Mutt"

In this example, the Animal class is the base class, and it has two subclasses, Dog and Cat, which add the bark and meow methods respectively. Then, there are three more subclasses: GermanShepherd and Mutt, which inherit from Dog, and Siamese, which inherits from Cat.

While this code may seem fine at first glance, it actually suffers from overuse of inheritance. The GermanShepherd, Siamese, and Mutt classes do not add any new functionality beyond what is already present in Dog and Cat; each one only sets a breed attribute. Adding a subclass for every breed means the hierarchy grows with the data rather than with the behavior, which makes the code harder to understand and maintain.

A better approach would be to use composition instead of inheritance. For example, each dog breed could be its own class, with a Dog object inside it to provide the common functionality. This would make the code more modular and easier to reason about.
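
A variation on that idea is sketched below: the breed is treated as data held by the animal rather than as a class of its own. The Breed class and the speak method are assumptions introduced for illustration and are not part of the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Breed:
    """Plain data describing a breed; no subclass per breed is needed."""
    name: str
    species: str
    sound: str


@dataclass
class Animal:
    name: str
    breed: Breed  # composition: an Animal *has a* Breed

    def move(self) -> str:
        return f"{self.name} is moving"

    def speak(self) -> str:
        return self.breed.sound


GERMAN_SHEPHERD = Breed("German Shepherd", "dog", "Woof!")
SIAMESE = Breed("Siamese", "cat", "Meow!")

rex = Animal("Rex", GERMAN_SHEPHERD)
luna = Animal("Luna", SIAMESE)
print(rex.move())    # Output: Rex is moving
print(luna.speak())  # Output: Meow!
```

Adding a new breed then means creating one more Breed value instead of another class.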

Hardcoding configuration values and paths antipattern

Hardcoding configuration values and paths makes code inflexible and harder to reuse, maintain, and deploy to different environments. If a configuration value or path changes, you have to update the code itself, which can be time-consuming and error-prone. Instead, use environment variables or configuration files to store these values.

To avoid hardcoding configuration values and paths in Python, you can use configuration files or environment variables. Configuration files can be used to store key-value pairs, which can be read into your Python code at runtime. Environment variables can be used to set values that your code can access through the os.environ dictionary.

Here's an example of using a configuration file to store database connection information:

    import configparser

    config = configparser.ConfigParser()
    config.read('config.ini')

    db_host = config['database']['host']
    db_port = config['database']['port']
    db_user = config['database']['user']
    db_password = config['database']['password']
    # use the database connection information to connect to the database

In this example, the database connection information is stored in a configuration file called config.ini. The ConfigParser class reads the file into a dictionary-like object, from which the connection settings are retrieved. Every value is returned as a string, so a numeric setting such as the port needs converting, for example with config.getint('database', 'port').
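
For reference, a self-contained sketch of the same idea with the file contents inlined. The values in the [database] section are hypothetical, and read_string() stands in for reading config.ini from disk:

```python
import configparser

# Hypothetical contents of config.ini, inlined so the example runs on its own.
SAMPLE_INI = """
[database]
host = localhost
port = 5432
user = app
password = change-me
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE_INI)

db_host = config['database']['host']
db_port = config.getint('database', 'port')  # values are strings unless converted
print(db_host, db_port)  # Output: localhost 5432
```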

Here's an example of using environment variables to store a path:

    import os

    data_path = os.environ.get('MY_DATA_PATH', '/default/data/path')
    # use the data path in your code

In this example, the os.environ dictionary is used to retrieve the value of the MY_DATA_PATH environment variable. If the variable is not set, a default value of /default/data/path is used. By using configuration files or environment variables to store configuration values and paths, you can make your code more flexible and easier to maintain. If a configuration value or path changes, you only need to update the configuration file or environment variable, rather than modifying your code. Additionally, configuration files and environment variables make it easier to reuse your code in different contexts or with different requirements.

Duplicated code antipattern

Duplicated code is a common "code smell" that occurs when the same or similar code appears in multiple places within a codebase. It makes the codebase more difficult to maintain, as changes may need to be made in multiple places. Here is an example of duplicated code in Python:

    def calculate_area_of_circle(radius):
        pi = 3.14159265359
        area = pi * (radius ** 2)
        return area

    def calculate_area_of_rectangle(length, width):
        area = length * width
        return area

    def calculate_area_of_triangle(base, height):
        area = 0.5 * base * height
        return area

    def calculate_circumference_of_circle(radius):
        pi = 3.14159265359
        circumference = 2 * pi * radius
        return circumference

    def calculate_perimeter_of_rectangle(length, width):
        perimeter = 2 * (length + width)
        return perimeter

    def calculate_perimeter_of_triangle(side1, side2, side3):
        perimeter = side1 + side2 + side3
        return perimeter

In this example, the value of pi is defined separately in both circle functions, and the related calculations are scattered across standalone functions. This can be refactored to reduce the duplication by creating a Shape class that stores pi once and provides methods for calculating area and perimeter/circumference:

    class Shape:
        def __init__(self):
            self.pi = 3.14159265359

        def calculate_area_of_circle(self, radius):
            area = self.pi * (radius ** 2)
            return area

        def calculate_area_of_rectangle(self, length, width):
            area = length * width
            return area

        def calculate_area_of_triangle(self, base, height):
            area = 0.5 * base * height
            return area

        def calculate_circumference_of_circle(self, radius):
            circumference = 2 * self.pi * radius
            return circumference

        def calculate_perimeter_of_rectangle(self, length, width):
            perimeter = 2 * (length + width)
            return perimeter

        def calculate_perimeter_of_triangle(self, side1, side2, side3):
            perimeter = side1 + side2 + side3
            return perimeter

This refactored code defines pi in a single place and groups the related calculations in one class, which can be used to calculate the area and perimeter/circumference of various shapes. A change to the constant now happens in one spot, which reduces the likelihood of introducing errors when updating or modifying the code. In practice, math.pi from the standard library removes the need to define the constant at all.

Not using functions classes or exceptions antipattern

Functions are a powerful tool that can help you to organize your code and make it more readable and maintainable. Not using functions can make your code more difficult to understand and to debug. Classes are a powerful tool that can help you to create reusable objects. Not using classes can make your code more difficult to understand and to maintain. Exceptions are a powerful tool that can help you to handle errors gracefully. Not using exceptions can make your code more difficult to use and to debug.

Using print for debugging antipattern

Using print statements for debugging can make it harder to debug and maintain code. Instead, use a debugger like pdb or ipdb to step through code and inspect variables.

Not using type annotations antipattern

Python 3.0 introduced function annotations, and Python 3.5 standardized their use as type hints. They can help catch bugs before the code runs when combined with a static type checker, and they make code more self-documenting. Not using type annotations can make your code harder to read, understand, and maintain. Type annotations allow you to specify the types of function arguments and return values, which can help catch bugs early, improve code clarity, and make it easier for others to use and understand your code.

Here's an example of a function with type annotations:
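
A minimal sketch matching the description that follows. The function body and the sample call are assumptions:

```python
def add_numbers(x: int, y: int) -> int:
    return x + y


print(add_numbers(2, 3))  # Output: 5
```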

In this example, the add_numbers function takes two arguments, x and y, both of which are expected to be integers. The function returns an integer as well. By using type annotations, you can make it clear to anyone reading your code what types of arguments the function expects and what type of value it returns.

Type annotations can also be used for class attributes and instance variables. Here's an example:

    class Person:
        name: str
        age: int

        def __init__(self, name: str, age: int):
            self.name = name
            self.age = age

In this example, the Person class has two attributes, name and age, both of which are expected to be of specific types. By using type annotations for class attributes and instance variables, you can make it clear to anyone using your class what types of values they should provide.

Type annotations can be especially useful in larger codebases or when working on a team, as they can help catch type-related bugs early and make it easier for team members to understand each other's code.

To use type hints in Python, you'll need Python 3.5 or later; annotating variables and class attributes, as in the Person example, requires Python 3.6 or later. Type annotations are not enforced by the Python interpreter, but you can use static checkers like mypy to find type-related errors before the code runs.

Not using f-strings antipattern

Python 3.6 introduced f-strings, which provide an easy and concise way to format strings. Not using f-strings can make code harder to read and maintain.

Not using f-strings in Python can make your code less readable and harder to maintain. f-strings are a powerful feature introduced in Python 3.6 that allow you to easily format strings with variables or expressions.

Here's an example of a string formatting without f-strings:
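
A minimal sketch using the % operator. The values of name and age and the message text are illustrative assumptions:

```python
name = "Alice"
age = 30

message = "My name is %s and I am %d years old." % (name, age)
print(message)  # Output: My name is Alice and I am 30 years old.
```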

In this example, we're using the % operator to format the string with the variables name and age. While this method works, it can be confusing and error-prone, especially with complex formatting.

Here's the same example using f-strings:
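
A minimal sketch of the f-string version, again with illustrative values for name and age:

```python
name = "Alice"
age = 30

message = f"My name is {name} and I am {age} years old."
print(message)  # Output: My name is Alice and I am 30 years old.
```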

In this example, we're using f-strings to format the string with the variables name and age. F-strings allow us to embed expressions inside curly braces {} within a string, making the code more concise and easier to read.

F-strings also allow for complex expressions, making them more versatile than other string formatting methods. Here's an example:
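
A minimal sketch with an expression inside the braces. The values of num1 and num2 are assumptions:

```python
num1 = 10
num2 = 20

message = f"The sum of {num1} and {num2} is {num1 + num2}."
print(message)  # Output: The sum of 10 and 20 is 30.
```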

In this example, we're using an f-string to format the string with the variables num1 and num2, as well as an expression to calculate their sum.

In summary, using f-strings in Python can make your code more readable, concise, and easier to maintain. F-strings are a powerful feature that allows you to format strings with variables and expressions in a more intuitive and error-free way.

Not using enumerate antipattern

Not using enumerate to loop over a sequence and get both the index and value can make code harder to read and maintain. Instead, use enumerate to loop over a sequence and get both the index and value.

Using enumerate in Python can make your code more readable and easier to maintain. enumerate is a built-in Python function that allows you to loop over an iterable and keep track of the index of the current element.

Here's an example of using enumerate to loop over a list and keep track of the index:

    fruits = ['apple', 'banana', 'orange']
    for index, fruit in enumerate(fruits):
        print(f'Fruit {index}: {fruit}')

In this example, we're using enumerate to loop over the fruits list and keep track of the index of each fruit. On each iteration, enumerate yields a tuple with the index and the value of the element, which we're unpacking into the variables index and fruit. We then print a formatted string that includes the index and the value of each element.

Using enumerate can make your code more readable and easier to understand, especially when you need to loop over an iterable and keep track of the index. Without enumerate, you would need to manually create a counter variable and increment it in each iteration of the loop, which can be error-prone and make the code harder to read.

Here's an example of achieving the same result as the previous example without using enumerate:

    fruits = ['apple', 'banana', 'orange']
    index = 0
    for fruit in fruits:
        print(f'Fruit {index}: {fruit}')
        index += 1

In this example, we're manually creating a counter variable index and incrementing it in each iteration of the loop. The resulting output is the same as the previous example, but the code is longer and harder to read.

In summary, using enumerate in Python can make your code more readable and easier to maintain, especially when you need to loop over an iterable and keep track of the index. Using enumerate can also help you avoid errors and make your code more concise.

Not using context managers antipattern

Not using context managers can lead to resource leaks and potential security vulnerabilities. Always use context managers to ensure that resources are properly closed.

Not using the else clause with for and while antipattern

In Python, you can use the else clause with a for or while loop to specify a block of code that should be executed if the loop completes normally without encountering a break statement. This can be a powerful tool for creating more robust and reliable code.

Here's an example of using the else clause with a for loop:
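
A minimal sketch matching the description that follows. The message text is an assumption:

```python
for i in range(5):
    print(i)
else:
    # Runs only because the loop finished without hitting a break.
    print("Loop completed normally")
```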

In this example, we're using a for loop to print the values 0 through 4. After the loop completes, we're using the else clause to print a message indicating that the loop completed normally. If we had used a break statement inside the loop to exit early, the else clause would not be executed.

Here's an example of using the else clause with a while loop:
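
A minimal sketch matching the description that follows. The message text is an assumption:

```python
i = 0
while i < 5:
    print(i)
    i += 1
else:
    # Runs once the condition becomes false, but not after a break.
    print("Loop completed normally")
```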

In this example, we're using a while loop to print the values 0 through 4. After the loop completes, we're using the else clause to print a message indicating that the loop completed normally. Again, if we had used a break statement inside the loop to exit early, the else clause would not be executed.

The else clause is most useful in search loops: the loop breaks as soon as it finds what it is looking for, and the else block handles the case where nothing was found, with no separate flag variable. Not using the else clause in such cases can make code harder to read and maintain.

Using list as a default argument value antipattern

Using list as a default argument value can lead to unexpected behavior when the list is modified. Instead, use None as the default argument value and create a new list inside the function if needed. In Python, you can use a list as a default argument value in a function. While this can be useful in some cases, it can also lead to unexpected behavior if you're not careful.

Here's an example of using a list as a default argument value:
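
A minimal sketch matching the description that follows:

```python
def add_item(item, lst=[]):  # the default list is created once, at definition time
    lst.append(item)
    return lst


print(add_item(1))  # Output: [1]
print(add_item(2))  # Output: [1, 2]
```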

In this example, we have a function add_item that takes an item argument and an optional lst argument, which defaults to an empty list. The function appends the item to the lst and returns the updated list. We then call the function twice, once with the argument 1 and once with the argument 2.

The output of this code is [1] for the first call and [1, 2] for the second, rather than [1] and [2].

This behavior may be surprising if you're not expecting it. The reason for this is that Python only evaluates the default argument value once, when the function is defined. In this case, the default value for lst is an empty list, which is created once when the function is defined. Each time the function is called without a value for lst, the same list object is used and modified by the function.

To avoid this issue, you can use None as the default value for the argument and create a new list inside the function if the argument is None. Here's an example of how to do this:

    def add_item(item, lst=None):
        if lst is None:
            lst = []
        lst.append(item)
        return lst

    print(add_item(1))
    print(add_item(2))

In this example, we're checking if lst is None inside the function and creating a new list if it is. This ensures that a new list is created each time the function is called without a value for lst.

In summary, using a list as a default argument value in Python can lead to unexpected behavior if you're not careful. To avoid this, you can use None as the default value and create a new list inside the function if the argument is None. This ensures that a new list is created each time the function is called without a value for the argument.

Python best practices

Follow PEP 8

PEP 8 is the official style guide for Python code. It provides guidelines for writing readable and maintainable code. Following this guide can make your code more consistent and easier to read for other developers.

Read the changelog and new features when a new version is released

It's good to know what changed in each new Python release. For instance, the very useful f-strings were introduced in 3.6.

Use descriptive variable names

Your variable names should be descriptive and reflect the purpose of the variable. This can make your code easier to understand and maintain. In Python, using descriptive variable names is an important aspect of writing clean and readable code. Descriptive names can make your code more understandable, easier to read, and easier to maintain. Here are some guidelines for using descriptive variable names in your Python code:

Use meaningful and descriptive names: Variable names should reflect the purpose and use of the variable in your code. Avoid using single-letter names or generic names like temp or data. Instead, use names that describe the value or purpose of the variable, such as num_items or customer_name.

Use consistent naming conventions: Use a consistent naming convention throughout your code. This can make your code easier to read and understand. In Python, PEP 8 recommends lowercase words separated by underscores for function and variable names (first_name) rather than camel case (firstName), and CapWords for class names.

Avoid using reserved keywords: Avoid using reserved keywords as variable names. This can lead to syntax errors and make your code harder to read. You can find a list of reserved keywords in Python in the official documentation.

Use plural names for collections: If a variable represents a collection of values, use a plural name to indicate that it is a collection. For example, use users instead of user for a list of users.

Use descriptive names for function arguments: Use descriptive names for function arguments to make it clear what values the function expects. For example, use file_path instead of path for a function that takes a file path as an argument.

Here's an example of using descriptive variable names in a Python function:

    def calculate_average(numbers):
        total = sum(numbers)
        count = len(numbers)
        average = total / count
        return average

In this example, we're using descriptive names for the variables in the function. numbers represents a collection of numbers, total represents the sum of the numbers, count represents the number of numbers in the collection, and average represents the average of the numbers.

Using descriptive variable names can make your Python code more readable and understandable. By following these guidelines, you can write code that is easier to read, easier to maintain, and less error-prone.

Write modular and reusable code

Divide your code into small, reusable functions and modules. This can make your code more maintainable and easier to test. Modular and reusable code is an important aspect of software development, as it allows for efficient and maintainable code. In Python, there are several ways to achieve this.

Functions: Functions are a way to encapsulate a set of instructions that can be reused throughout the code. They can take arguments and return values, making them versatile and adaptable to different use cases.

Example:
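A minimal sketch of a reusable function. The `rectangle_area` helper is a hypothetical name chosen for illustration; it takes arguments and returns a value, so the same logic can be called from several places:

```python
def rectangle_area(width: float, height: float) -> float:
    """Return the area of a rectangle (hypothetical helper for illustration)."""
    return width * height


# The same function is reused with different arguments.
small_area = rectangle_area(2, 3)
large_area = rectangle_area(4, 5)
print(small_area)  # 6
print(large_area)  # 20
```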

Classes: Classes allow for the creation of objects that can be reused throughout the code. They encapsulate data and functionality, providing a blueprint for creating multiple instances of the same object.

Example:

    class Rectangle:
        def __init__(self, width, height):
            self.width = width
            self.height = height

        def area(self):
            return self.width * self.height

    rect1 = Rectangle(2, 3)
    rect2 = Rectangle(4, 5)
    print(rect1.area())  # output: 6
    print(rect2.area())  # output: 20

Modules: Modules are files that contain Python code and can be imported into other files. They allow for the reuse of code across multiple projects and can be organized into packages for better organization.

Example:

    # my_module.py
    def greeting(name):
        print(f"Hello, {name}!")

    # main.py
    import my_module

    my_module.greeting("Alice")  # output: Hello, Alice!

Libraries: Python has a large number of libraries that can be used to accomplish common tasks, such as data analysis, web development, and machine learning. These libraries often provide modular and reusable code that can be incorporated into your own projects.

Example:

    # Using the NumPy library to perform a vector addition
    import numpy as np

    vector1 = np.array([1, 2, 3])
    vector2 = np.array([4, 5, 6])
    result = vector1 + vector2
    print(result)  # output: [5 7 9]

By using functions, classes, modules, and libraries, you can create modular and reusable code in Python that can be easily maintained and adapted to different use cases.

Handle errors and exceptions

Always handle errors and exceptions in your code. This can prevent your code from crashing and provide better error messages for debugging. Properly handling exceptions is an important part of writing robust and reliable Python code. Here are some tips for handling exceptions in Python:

Use try-except blocks: When you have code that can potentially raise an exception, you should wrap it in a try-except block. This allows you to catch the exception and handle it gracefully.

Example:

    try:
        x = int(input("Enter a number: "))
        y = int(input("Enter another number: "))
        result = x / y
        print(result)
    except ZeroDivisionError:
        print("You can't divide by zero!")
    except ValueError:
        print("Invalid input. Please enter a number.")

Be specific with exceptions: Catching a broad exception like Exception can hide bugs and make it difficult to understand what went wrong. It's better to catch specific exceptions that are likely to occur in your code.

Example:

    try:
        f = open("myfile.txt")
        lines = f.readlines()
        f.close()
    except FileNotFoundError:
        print("File not found!")

Use finally blocks: If you need to perform some cleanup code, such as closing a file or releasing a resource, use a finally block. This code will always be executed, whether an exception is raised or not.

Example:

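    f = None
    try:
        f = open("myfile.txt")
        lines = f.readlines()
    except FileNotFoundError:
        print("File not found!")
    finally:
        # f is still None if open() failed, so only close a file that was opened
        if f is not None:
            f.close()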

Raise exceptions when appropriate: If you encounter a situation where the code cannot proceed because of some condition, raise an exception. This makes it clear what went wrong and allows the calling code to handle the error.

Example:
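A minimal sketch of raising an exception when the code cannot proceed. The `withdraw` function and its messages are hypothetical and chosen only for illustration; the caller decides how to handle the error:

```python
def withdraw(balance: float, amount: float) -> float:
    """Return the new balance after a withdrawal (hypothetical example)."""
    if amount <= 0:
        raise ValueError("Withdrawal amount must be positive.")
    if amount > balance:
        raise ValueError("Insufficient funds.")
    return balance - amount


try:
    withdraw(100, 150)
except ValueError as error:
    # The calling code handles the problem instead of crashing.
    message = str(error)
    print(f"Withdrawal failed: {message}")
```

Raising a specific built-in exception such as ValueError keeps the failure explicit and lets callers catch exactly the error they expect.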

By following these tips, you can write Python code that handles exceptions properly, making your code more robust and reliable.

Use virtual environments or docker containers

Use virtual environments to isolate your project dependencies. This can prevent conflicts between different versions of packages and ensure that your code runs consistently across different environments. Python virtual environments and Docker are both tools that allow you to manage and isolate dependencies and configurations for your Python projects, but they serve different purposes and have different use cases.

Python virtual environments are used to create isolated environments with specific versions of Python and installed packages, independent of the system's global Python installation. This is useful when you have multiple Python projects with different dependencies, or when you need to test code on different versions of Python.

A virtual environment can be created using the venv module or other third-party tools like virtualenv. Once created, you can activate the environment to use the isolated Python interpreter and installed packages.

Example:
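On the command line, an environment is commonly created with `python -m venv .venv` and activated with `source .venv/bin/activate` (or `.venv\Scripts\activate` on Windows). The sketch below performs the creation step from Python with the standard-library venv module. The temporary directory and `with_pip=False` are assumptions made to keep the demonstration quick and self-cleaning:

```python
import tempfile
import venv
from pathlib import Path

# Create the environment in a temporary directory (an assumption for this demo;
# a real project would typically use a ".venv" folder in the project root).
with tempfile.TemporaryDirectory() as tmp_dir:
    env_dir = Path(tmp_dir) / ".venv"
    # with_pip=False skips installing pip, which keeps the demo fast.
    venv.create(env_dir, with_pip=False)
    # A virtual environment is described by a pyvenv.cfg file in its root.
    config_exists = (env_dir / "pyvenv.cfg").exists()

print(config_exists)
```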

Docker, on the other hand, is a tool for creating and running containerized applications. A Docker container is a lightweight, portable, and self-contained environment that includes everything needed to run an application, including the operating system, runtime, libraries, and dependencies.

Docker containers are useful when you need to ensure that your application runs consistently across different environments, or when you need to deploy your application to different servers or cloud platforms.

To create a Docker container for a Python application, you would typically create a Dockerfile that specifies the dependencies and configurations for your application, and then build and run the container using the Docker CLI.

Example:

    # Dockerfile
    FROM python:3.9-alpine
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt
    COPY . .
    CMD ["python", "app.py"]

    # bash
    $ docker build -t myapp .
    $ docker run myapp

In summary, Python virtual environments and Docker are both useful tools for managing dependencies and configurations for your Python projects, but they serve different purposes and have different use cases. Virtual environments are useful for managing Python dependencies locally, while Docker is useful for creating portable and consistent environments for your applications.

Document your code

Document your code using comments, docstrings, and README files. This can make your code more understandable and easier to use for other developers. Python docstrings and README files are two ways to document your code and provide information to users and other developers about how to use and contribute to your code.

Docstrings are strings that are placed at the beginning of a function, module, or class definition to provide documentation about its purpose, arguments, and behavior. They can be accessed using the __doc__ attribute and can be formatted using various conventions such as Google, NumPy, and reStructuredText.

Here's an example of a simple docstring using the Google convention:

    def greet(name: str) -> str:
        """Return a greeting message for the given name.

        Args:
            name: A string representing the name of the person.

        Returns:
            A string representing the greeting message.
        """
        return f"Hello, {name}!"
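As a brief sketch of how a docstring can be read back at runtime, the snippet below uses a shortened version of `greet` together with the `__doc__` attribute and the standard-library `inspect.getdoc` function:

```python
import inspect


def greet(name: str) -> str:
    """Return a greeting message for the given name."""
    return f"Hello, {name}!"


# The docstring is stored on the function object itself.
print(greet.__doc__)

# inspect.getdoc() returns the same text with indentation cleaned up,
# which matters for multi-line docstrings.
print(inspect.getdoc(greet))
```

The built-in `help(greet)` displays the same text interactively.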

README files, on the other hand, are documents that provide an overview of your project, its purpose, installation instructions, usage, and other important information. They are typically written in plain text or Markdown format and placed in the root directory of your project.

Here's an example of a simple README file:

    # My Project

    My Project is a Python package that provides useful tools for data analysis.

    ## Installation

    To install My Project, run the following command:

        pip install myproject

    ## Usage

    Here's an example of how to use My Project:

By using both docstrings and README files, you can provide comprehensive documentation for your code, making it easier for others to understand and use your code, and encourage contributions and collaborations.

Write unit tests

Write unit tests for your code to ensure that it works as intended. This can catch bugs early and prevent regressions when you make changes to your code.
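A minimal sketch of unit tests using the standard-library unittest module, applied to the `calculate_average` function shown earlier. The test class and method names are hypothetical. The suite is run programmatically here so the snippet works when pasted into any script:

```python
import unittest


def calculate_average(numbers):
    """Return the arithmetic mean of a non-empty collection of numbers."""
    return sum(numbers) / len(numbers)


class TestCalculateAverage(unittest.TestCase):
    def test_average_of_integers(self):
        self.assertEqual(calculate_average([1, 2, 3]), 2)

    def test_empty_input_raises(self):
        # len([]) is 0, so the division raises ZeroDivisionError.
        with self.assertRaises(ZeroDivisionError):
            calculate_average([])


# Load and run the tests without exiting the interpreter.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCalculateAverage)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

In a real project the tests would usually live in their own file and be run with `python -m unittest`.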

Use version control

Use version control, such as Git, to manage your code and collaborate with other developers. This can make it easier to track changes and revert to previous versions if necessary.

Gitflow is a branching model for Git that provides a structured workflow for managing branching and merging in software development projects. It was first introduced by Vincent Driessen in 2010 and has since become a popular model for managing Git repositories.

Gitflow defines a specific branch structure and set of rules for creating, merging, and managing branches. The main branches in Gitflow are:

master branch: The master branch contains the production-ready code, and should only be updated when new features are fully tested and ready to be released.

develop branch: The develop branch is used for integrating new features and bug fixes into the main codebase. All new development should happen on this branch.

In addition to these main branches, Gitflow defines several supporting branches, including:

Feature branches: Feature branches are used for developing new features or making changes to the codebase. They are created from the develop branch and are merged back into develop when the feature is complete.

Release branches: Release branches are used for preparing a new release of the code. They are created from the develop branch and are merged into both master and develop when the release is ready.

Hotfix branches: Hotfix branches are used for fixing critical bugs in the code. They are created from the master branch and are merged back into both master and develop when the hotfix is complete.

By using Gitflow, development teams can better organize their code, collaborate more effectively, and manage their projects more efficiently. It provides a clear structure for managing branches and releases, and ensures that changes to the codebase are properly tested and integrated before they are released to production.

Avoid magic numbers and strings

Avoid using magic numbers and strings in your code. Instead, define constants or variables to represent these values. This can make your code more readable and maintainable. In Python, "magic numbers" and "magic strings" are hard-coded values that appear in your code without any explanation of what they represent. These values are problematic because they can make your code harder to understand, maintain, and modify.

To avoid using magic numbers and strings in your Python code, you can define constants or enums instead. Constants are variables that hold a fixed value, while enums are special classes that allow you to define a set of named values.

Here's an example of using constants instead of magic numbers in Python:

    # Bad: using magic numbers
    def calculate_discount(price):
        if price > 100:
            return price * 0.9
        else:
            return price * 0.95

    # Good: using constants
    DISCOUNT_THRESHOLD = 100
    DISCOUNT_RATE_HIGH = 0.9
    DISCOUNT_RATE_LOW = 0.95

    def calculate_discount(price):
        if price > DISCOUNT_THRESHOLD:
            return price * DISCOUNT_RATE_HIGH
        else:
            return price * DISCOUNT_RATE_LOW

Similarly, you can use enums to define a set of named values, which can make your code more readable and maintainable. Here's an example of using enums in Python:

    from enum import Enum

    # Bad: using magic strings
    def get_status_code(status):
        if status == "success":
            return 200
        elif status == "error":
            return 500
        else:
            return 400

    # Good: using enums
    class StatusCode(Enum):
        SUCCESS = 200
        ERROR = 500
        BAD_REQUEST = 400

    # Member names are upper-case, so this version expects "SUCCESS" or "ERROR"
    def get_status_code(status):
        if status == StatusCode.SUCCESS.name:
            return StatusCode.SUCCESS.value
        elif status == StatusCode.ERROR.name:
            return StatusCode.ERROR.value
        else:
            return StatusCode.BAD_REQUEST.value

By using constants or enums instead of hard-coded values in your code, you can make it easier to read, understand, and modify, which can ultimately save you time and effort in the long run.

Use list and dict comprehensions

Use list comprehensions instead of for loops to create lists. This can make your code more concise and readable. In addition to list comprehensions, Python also supports dict comprehensions, which allow you to create new dictionaries from existing iterables using a similar syntax.

List Comprehensions

List comprehensions provide a concise way to create new lists from existing iterables. They consist of an iterable, a variable representing each element of the iterable, and an expression to manipulate the variable. Here are some examples of list comprehensions:

    # Create a list of squares of the first ten integers
    squares = [x ** 2 for x in range(1, 11)]

    # Create a list of only the even numbers from a given list
    numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    even_numbers = [x for x in numbers if x % 2 == 0]

    # Create a list of words longer than three characters from a string
    sentence = "The quick brown fox jumps over the lazy dog"
    words = sentence.split()
    long_words = [word for word in words if len(word) > 3]

Dict Comprehensions

Dict comprehensions work similarly to list comprehensions, but instead of creating a list, they create a new dictionary. They consist of an iterable, a variable representing each element of the iterable, and expressions to create the keys and values of the new dictionary. Here are some examples of dict comprehensions:

    # Create a dictionary of squares of the first ten integers
    squares_dict = {x: x ** 2 for x in range(1, 11)}

    # Create a dictionary from two lists
    keys = ['a', 'b', 'c']
    values = [1, 2, 3]
    dict_from_lists = {keys[i]: values[i] for i in range(len(keys))}

    # Create a dictionary from a list of tuples
    tuple_list = [('a', 1), ('b', 2), ('c', 3)]
    dict_from_tuples = {key: value for key, value in tuple_list}

List and dict comprehensions are powerful tools that can help you write more expressive and readable code in Python. They can simplify complex operations and make your code more efficient and concise.

Use type annotations

Python 3 supports type annotations, which let you declare the expected types of function arguments and return values. Annotations are not enforced at runtime, but static type checkers such as mypy can use them to catch type errors before the code runs. They also make your code more readable and maintainable, and improve code completion in development environments.
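A minimal sketch of an annotated function. The name `repeat_greeting` is hypothetical and used only for illustration; the annotations are stored on the function and are available to tools, while Python itself does not check them when the function is called:

```python
def repeat_greeting(name: str, times: int = 1) -> str:
    """Return the greeting for `name` repeated `times` times."""
    return " ".join([f"Hello, {name}!"] * times)


print(repeat_greeting("Alice", 2))  # Hello, Alice! Hello, Alice!

# Annotations are kept as metadata that type checkers and editors can read.
print(repeat_greeting.__annotations__)
```

A static type checker would flag a call such as `repeat_greeting("Alice", "2")`, because the second argument is annotated as an int.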